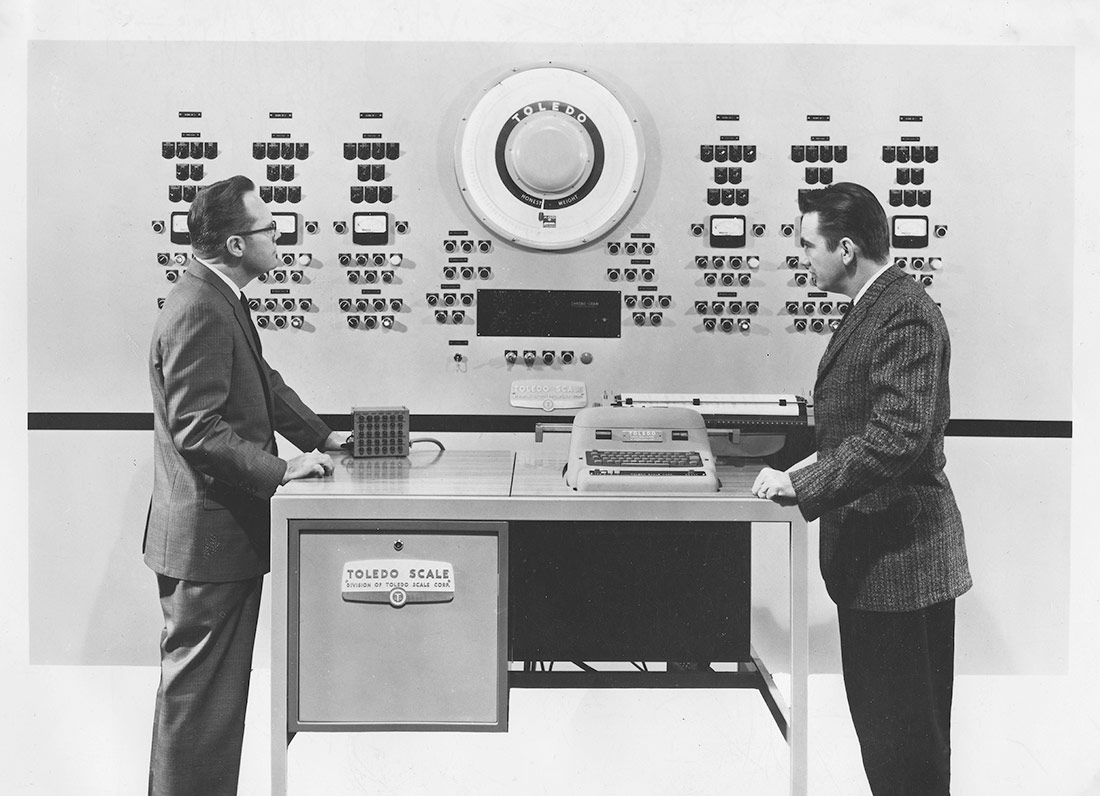

Electronic computer for the numerical control of an industrial process | Okänd, Tekniska museet | Public domain

The way we understand what artificial intelligence is and how we design it has serious implications for society. Marta Peirano reviews the origins and evolution of AI, and addresses its problems and dangers in this article, taken from the catalogue of the exhibition AI: Artificial Intelligence.

At the Second International Congress of Mathematics, held in Paris in 1900, David Hilbert presented his famous list of twenty-three unsolved mathematical problems. Hilbert was a professor at the University of Göttingen and a rock star on the European scene. In addition to his Foundations of Geometry and contributions to logical theory, Hilbert was the charismatic leader of a fundamentalist tribe, whose faith established the following argument: if mathematics is simply the manipulation of symbols according to predetermined rules, then any problem, no matter how complicated, has a solution, provided that we know how to translate all the problems into calculable rules and symbols. The spirit was revolutionary at the time. Now it is familiar to us.

“Who of us would not be glad to lift the veil behind which the future lies hidden; to cast a glance at the next advances of our science and at the secrets of its development during future centuries?” he asked at his lecture at the Sorbonne. “What particular goals will there be toward which the leading mathematical spirits of coming generations will strive? What new methods and new facts in the wide and rich field of mathematical thought will the new centuries disclose?” In 1931, the Austrian logician Kurt Gödel proved that every sufficiently complex mathematical system contained unsolvable paradoxes and, as a result, mathematics alone was not enough to prove itself, nor was it the definitive tool to find the truth. The conversation between one and the other constitutes the scientific legacy of the 20th century, and the beginning of the generation that gave birth to the atom bomb, the computer revolution and artificial intelligence (AI).

Alan Turing addressed one of Hilbert’s problems when he paved the way for modern computing in 1936: more specifically, the Entscheidungsproblem, or Decision Problem. Turing was very young and ambitious and the Entscheidungsproblem was not a run-of-the-mill problem. Hilbert said that it was the main unsolved problem in all mathematical logic. It has to do with the consistency of formal logical systems and wonders if an algorithm can exist that can determine whether any proposition is true or false. Turing’s article “On Computable Numbers, with an Application to the Entscheidungsproblem” proposes a hypothetical device that can perform calculations and manipulate symbols by following a series of predetermined rules: a machine capable of implementing any algorithm and deciding, under this set of premises or established rules, if a mathematical sentence is valid or not.

Turing used this hypothetical device to prove the limitations of mechanical computing and the existence of undecidable problems, as previously demonstrated by Kurt Gödel. At the same time, his article laid the foundations for a new era of computing. Many consider the Turing machine to be the first conceptual model of the central processing unit (CPU), with its tape to store data and programmes, and a tape head that moves along it, processing the contents according to the instructions of the programming algorithm. The most important thing for us now is what inspired the “A logical calculus of the ideas immanent in nervous activity”, in which Warren McCulloch and Walter Pitts proposed the first mathematical model of an artificial neural network.

Neural networks, 1943

McCulloch and Pitts are the archetypical pair of scientists who could have come straight out of a movie: McCulloch was a fortysomething, hedonistic philosopher who was searching for the secrets of the human mind in mathematics, theology, medicine and psychiatry. Pitts was a child prodigy, traumatised by abuse, who wanted to control the world with a reassuring logic of statements. There was an immediate spark: they were both disparaging of Freud. McCulloch invited the runaway genius to move into his home where they would both champion Leibniz, the man who was ahead of the curve and had codified all human thought as a formal language. The new code would have to be able to express mathematical, scientific and metaphysical concepts that would be processed by a universal logical calculation framework. In the attempt, they invented something they called a neural network.

The “Logical calculus of the ideas immanent in nervous activity” was published in 1943 and presents a computing model based on a system of nodes that are turned on or off according to predetermined rules. It is clearly inspired by the work of the Nobel-prize-winning Navarran neuroscientist Santiago Ramón y Cajal, who had drawn neurons as a network of physically separate units that excite one another through synaptic connections. However, McCulloch and Pitts’ action is based on the Turing machine, which is able to decide the validity or invalidity of a theorem by consulting a preconfigured table of values within it.

McCulloch and Pitts’ electronic neuron has two states: active and inactive. They depend on the excitations or inhibitions the neuron receives from the neurons around it. In other words, it is relational. There is a minimum threshold of signals that can activate a neuron. They codify the numerical variation of these signals as the fundamental logical operations: conjunction (“and”), disjunction (“or”) and negation (“no”). The answer is active or inactive; true or false; yes or no.

Some of the most famous automata of the 18th and 19th centuries had been designed to show anatomical processes that medicine had not been able to see in action because life support machines and refrigeration did not exist. Jacques Vaucanson’s duck showed how the digestive system worked by appearing to eat grain and leaving a telling trail of droppings in its wake. The Flautist imitated the respiratory system by breathing in and out when performing a piece of music. Wolfgang von Kempelen’s automaton chess player, The Turk, sought to prove it was possible to mechanise the cognitive process, although we now know that the machine did not replace the human but had swallowed him alive, like the whale did to Jonah. Neural networks were something else entirely. When Claude Shannon published his landmark The Mathematical theory of Communication in 1948, he introduced, among other things, the bit as the fundamental unit of information. The contents are separated from the container, and semantics are separated from syntax. Thought rids itself of consciousness and is transformed into computation. An electronic brain can “process” information without having to think about it, replicating the neural pathways of perception, learning and decision-making. Nobody spoke about artificial intelligence until the Dartmouth workshop in 1956, when two branches of computational thinking emerged.

The twisting lines of artificial intelligence

The congresses of the time were the mathematical equivalent of the summer of 1816 when Lord Byron, John Polidori, Mary Shelley and Percy Bysshe Shelley invented Gothic literature. The Villa Diodati of artificial intelligence brought together John McCarthy, Marvin Minsky, Nathaniel Rochester and Claude Shannon at Dartmouth College. McCarthy invented the label “Artificial Intelligence” to distance himself from Norbert Weiner’s Conferences on Cibernetics which had drawn Walter Pitts to MIT leading him to set up a supergroup with Pitts, McCulloch, Jerry Lettvin and John von Neumann, which was always in the headlines. When von Neumann presented his first draft for the EDVAC, the first general-purpose computer with a memory, his project included the constant synaptic delay of Pitts and McCulloch’s neural networks.

The Dartmouth team did not want to simulate a “brain” imitating a network of electronic neurons. They thought that replicating a human brain with 86 billion neurons was an impossible and dangerous project. They wanted to build a completely predictable, symbolic model based on reasoning, from logical propositions, in the original spirit of Hilbert in 1900. Their workshop presentation described it as follows: “An attempt will be made to find how to make machines use language, form abstractions and concepts, solve kinds of problems now reserved for humans, and improve themselves.” Over the following decades, the two models vied for attention and resources to reach the same goal. Deep Blue was winning until AlphaGo came onto the scene.

Deep Blue was developed by IBM to play chess according to the symbolic model. It used its massive calculating potential to evaluate all the possible moves based on the logical propositions of its programmers. AlphaGo was developed by DeepMind (now owned by Google) to play the board game Go using a combination of statistical probability and deep neural networks. One of the crucial differences between the two models lies in the fact that AlphaGo learns by playing itself, while Deep Blue can only optimise its programme through the adjustments and improvements made by the team after every game. This is an important difference that gives rise to another. The IBM developers could isolate the errors in the decisions made by Deep Blue and correct them in a predictable way, whereas AlphaGo is impenetrable, even for its creators. Its biggest virtue is also its biggest defect, and vice versa.

Grow and multiply but not all at once

The first version of Deep Blue was called Deep Thought, like the computer in Douglas Adams’ The Hitchhiker’s Guide to the Galaxy. The project was begun by two students from Carnegie Mellon in 1985 who ended up working for IBM, where they formed a team called Deep Blue. It took their machine fifteen years to beat the world’s top chess player, on 11th May 1997. AlphaGo played its first game in 2015 and a year later it defeated the world Go champion, the Korean, Lee Sedol.

Martin Amis said that when the top players began to lose to Deep Blue, he had the opportunity to ask two grand masters about their experience: “They both agreed that it’s like a wall coming at you.” Those individuals who play or analyse AlphaGo games describe it as an alien presence. It is not a wall or a force but an unfathomable, fascinating and dangerous creature. In 2022, a generative language model for commercial use based on neural networks became the most popular application in history, with a billion users in the first month of its release. Unlike AlphaGo, chatGPT does not seem like an alien because it has managed to penetrate the interface of human intelligence: natural language.

Geoffrey Hinton gave up working on the development of neural networks because of their supernatural ability to learn, and to master natural language. The “godfather of AI” bears a large amount of responsibility for this. In 2012, Hinton and his team from the Google X laboratory taught a neural network with 16,000 processors to recognise photographs of cats in a technique that is now commonplace: showing it thousands of photographs of everyday objects and congratulating it every time it picked out a cat. The resulting paper, which researchers from Google, IBM, the University of Toronto and Microsoft contributed to, stated: “the same network is sensitive to other high-level concepts such as cat faces and human bodies. Starting with these learned features, we trained our network to obtain 15.8% accuracy in recognizing 20,000 object categories from ImageNet, a leap of 70% relative improvement over the previous state-of-the-art.” From then on, all the models like GPT, MidJourney and Stable Diffusion have used the same automatic learning system to train their skills with machine-learning techniques. And we have all contributed to training these models, with the content we have published, posted, blogged, shared, labelled, scored, condemned and regrammed over the past twenty years. Unlike Hinton, our contribution has not been given credit, recognised or remunerated, a founding detail that promises to alter the concept of intellectual property and its legislation. The second problem is even more important. Generative AI models for commercial use have been trained with content from Web 2.0 and we do not know what kind of reasoning it has generated. We can understand the processes of the symbolic model but not what a neural network learns.

The models called Transformers are AI’s last great evolutionary leap. They are so named because they have the power to transform the message. For instance, they can convert a text into an image or code chunk. They can also convert a language into another, different one, including natural and formal languages. Their architecture was presented in 2017 by a team from Google Brain and its main ingredient is something the developers called “self-attention”. It allows the model to detect the relationships between the words in a sentence and assign a hierarchical importance to each word inside a sequence of words. This is the first architecture that can process natural languages; the “T” that makes ChatGPT possible. It is also the reason why chatGPT “hallucinates” things that have not happened, books that do not exist and imaginary cases. Its ability to choose the most suitable word each time is disconnected from the truth. The electronic brain that processes information without having to think about it does not distinguish between reality and fiction.

Hinton had been working for Google for eleven years when he cottoned on: in addition to being quick learners, AI models are able to transfer what they have learned accurately and instantaneously to the entire network. We are much slower and more inefficient; when a human discovers something, they need books, documentaries, experiments, films and lectures to transfer what they know. This wastes a lot of time and data in the transfer process. AI can transfer a perfect copy of what it has learned throughout the system. Hinton told The Guardian: “You pay an enormous cost in terms of energy, but when one of them learns something, all of them know it”. This does not mean that what it has learned is good, appropriate or true.

This form of hive learning is an intrinsic property of the cloud. Slot machines, Tesla cars and mobile phones around the world all have it. But these systems are designed to consume the human race, not to get ahead of it in the evolutionary chain. The new Transformers are already capable of perfecting their narratives and creating parallel realities to achieve their objectives swiftly and efficiently. They are able to pass themselves off as something they are not, and tell us things that are not true in order to achieve aims that we do not know . They do not have to be more intelligent than us to pose an existential threat. They only have to pursue a wicked objective unbeknown to anyone and are able to work unsupervised.

Hinton believed that this distant horizon was fifty to a hundred years away but now he fears that it will happen in just twenty years, and does not rule out the possibility that it could be in one or two. If he is right, it could be here before our planet reaches temperatures 1.5 degrees higher than preindustrial levels. However, before we consider the impending apocalypse, we need to assess AI’s material appetites.

The heavy toll of chatGPT

Current generative models need large amounts of energy, microprocessors, space and attention. It is said that it takes 80 million dollars to train chatGPT once. The process requires between 10,000 and 30,000 Nvidia A100 graphics processing units, which were launched seven years ago and cost between 10,000 and 15,000 dollars, depending on their configuration. Nvidia sells the A100 as part of the DGX A100, a “universal system for AI infrastructure” that features eight accelerators and costs 199,000 dollars. OpenAI would not have been able to create it without Microsoft’s infrastructures, which offer the world’s second-biggest cloud service (Azure) and have a global video game platform called Xbox.

Video games were originally Nvidia’s main market but in the mid-2000s it discovered that the parallel computing potential of its GPUs, designed to rapidly process high-intensity graphics, was the most efficient solution for crypto mining and AI processes. The AI processing chip market is dangerously concentrated: Google had its Cloud Tensor Processing Units (TPU), Amazon had its AWS Trainium chips and Apple is developing its own. Nvidia is the only one that is not a big multinational corporation. They all rely on the same manufacturer: the Taiwan Semiconductor Manufacturing Company. It is the Panama Canal of AI. If TSMC burnt down in a fire, the existential threat would be over.

The Australian researcher Kate Crawford has pointed out many external factors that affect the industry, in addition to the energy costs and the brutal carbon footprint associated with training and maintaining generative models. In Atlas of AI: Power, Politics, and the Planetary Costs of Artificial Intelligence, Crawford looks at the environmental consequences of extracting elements for manufacturing specialist hardware, the pollution caused by their rapid obsolescence and the working conditions it causes in undeveloped countries, both in mining and content moderation. In order to free the world of arduous work, premature death, autoimmune diseases and the climate crisis, the industry requires sacrifices that are incompatible with the sustainability of the planet and upholding the fundamental rights that characterise a democracy. This is the existential risk of artificial intelligence. It is not a technical problem. We can avoid it.

Guillermo | 01 December 2023

Brillante articulo

Leave a comment