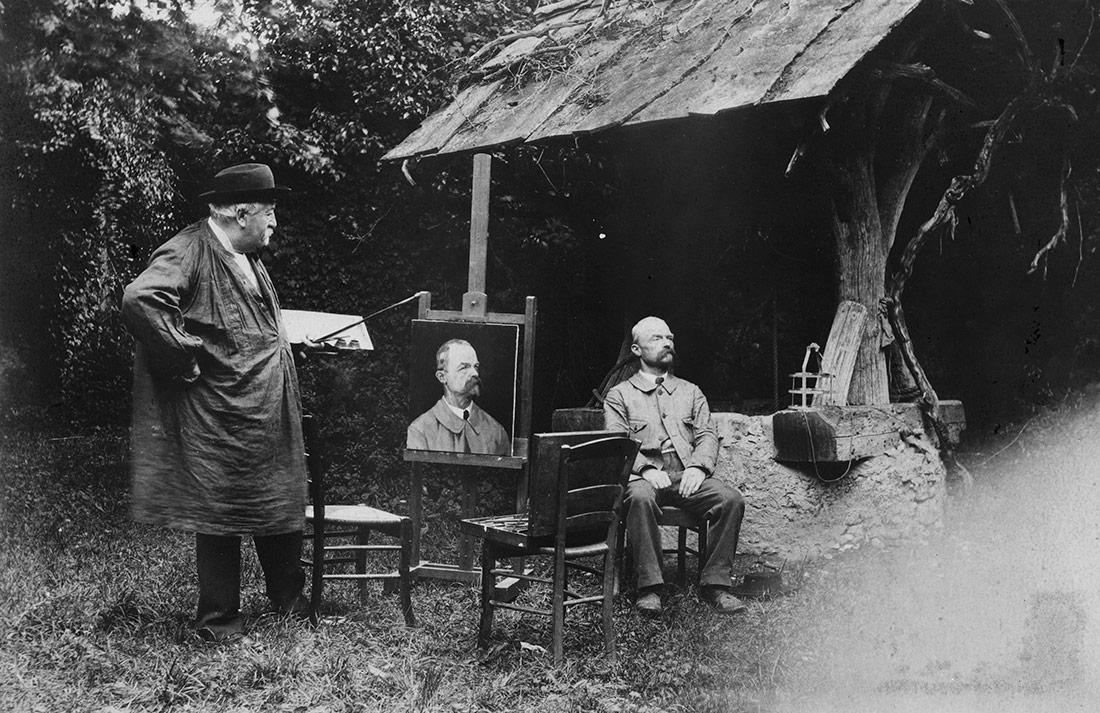

Antoine Lumière painting portrait of posing man, 1905 | Library of Congress | No known copyright restrictions

All drawings represent what we see, what we imagine or what we remember. Or so John Berger, one of the best-known and most respected art critics of recent decades, understood it. If he was right, what do works made by artificial intelligence symbolise? Does a machine understand what it sees? Is it capable of fantasising? Does it have memory?

Berger on Drawing is a collection of texts by the writer, art critic and social theorist John Berger (London, 1926 – Paris, 2017). In the book, he reflects on a range of topics including the difficulty of depicting reality, art as communication and the relationship between object and artist. In one of his essays, he says: “There are drawings that study and question the visible, those which put down and communicate ideas, and those done from memory”, and continues, “Each type of drawing speaks in a different tense.” Present, conditional, past.

We should ask ourselves whether Berger’s thesis would change in light of recent advances in artificial intelligence (AI) applied to creativity. Since the second half of 2022, a number of applications capable of producing surprisingly high-quality images have made a splash in internet culture. The best known are DALL·E 2, Midjourney and Stable Diffusion, all of which belong to a broad category known as “generative AI”. Machines are beginning to produce pieces with a certain sense of meaning and beauty, but, to return to Berger, where does that come from? What do they see, imagine or remember?

Present

“For the artist drawing is discovery. And that is not just a slick phrase, it is quite literally true. It is the actual act of drawing that forces the artist to look at the object in front of him, to dissect it in his mind’s eye and put it together again”. For Berger, drawing is “knowing by hand”, a process in which the artist looks so closely at what is in front of him that object and artist merge into one.

Given the current state of technology, it is not surprising that a computer can portray what is in front of it. For more than a decade, artists, companies or both have experimented with robots capable of drawing on canvas or paper. To cite just a couple of examples, in 2016 the agency Visionaire and Cadillac created ADA0002, an assembly-line robot that drew visitors to an exhibition in New York in real time. Meanwhile, artist Patrick Tresset has spent more than a decade developing intentionally rustic robots that sketch portraits and still lifes from different points of view.

Machines can represent what is visible, but do they really see? It may seem too obvious to mention, but AIs have no eyes or other sensory organs, so they clearly cannot see the way humans do. To emulate this ability, they rely on cameras and sensors to identify what is around them. This allows them to do remarkable things, such as recognising faces or physical movements, but because they are programs trained on particular patterns, a departure from those patterns (for example, a face that is half hidden or upside down) can go unrecognised. Put simply, this is why reCAPTCHA, the system for proving we are not robots, asks us to identify traffic lights: it is a task that Google Street View’s AI has already performed, but humans are needed to confirm that it got it right.

We don’t know whether machines will be able to develop near-human vision in the future, but in creative terms it would be a leap to equate their gaze with that of the artist. As Berger said, in capturing reality, people reflect both their outer and inner worlds, in a tension-filled dialogue that seems far removed from the way in which today’s AIs work.

Conditional

The second type of drawing is, for Berger, that which wells up from the imagination: “Such drawings are visions of ‘what would be if’”. Recent developments in AI seem to fit better with this second category: that which comes from inventing, fantasising and playing with unsuspected connections. This is the direction in which the applications themselves aim to move, as it is the type of creation that best demonstrates their ability to blend concepts, techniques, attributes, artistic styles and so on. It is not for nothing that the text command to activate Midjourney is /imagine followed by a description of what you want to depict.

For Berger, in this conditional drawing, “it is now a question of bringing to the paper what is already in the mind’s eye. Delivery rather than emigration”. The logic of generative AI is the same: based on a command in the form of words or phrases (what are known as “prompts”), the program delivers what is asked of it. The level of refinement of these instructions will determine how satisfactory the results are, which has led to prompt guides, tools for refining prompts and even a marketplace for buying and selling them.

This mechanism reflects one of the main characteristics of today’s generative AIs: to ensure that they work properly, they need human intervention both at the beginning and at the end of the process. Developers feed in data sets and training parameters, which they then refine by reviewing and correcting the results over and over again. The same is true once they are up and running: the user enters a prompt and then decides whether the results are suitable or need to be repeated or refined. In short, AI is perfectly capable of executing work on its own, but for it to be relevant, human direction and assessment are still needed.

This understanding of generative AI as a tool is essential if we wish to defuse certain debates. One might think that DALL·E 2 is performing nothing short of magic when it paints a scene around Girl with a Pearl Earring, but it can only do so because it has been provided with all the works of Johannes Vermeer and has honed, with an inevitable degree of subjectivity, what it means to paint like the Dutch artist. Could it learn the style of new artists without help, and even develop a style of its own? It probably could, as today’s AI technology already allows machine learning methods in which the machine monitors its own results. What is less clear is whether, without specified rules and without human involvement at some points in the process, the images it produced would have any value or even meaning in the eyes of humans.

Another question, in philosophical terms, is who the agent of this creative process would be. In The Creativity Code, Marcus du Sautoy notes: “Art is ultimately an expression of human free will, and until computers have their own version of this, art created by a computer will always be traceable back to a human desire to create”. And while the British mathematician does believe that AIs can be creative, he asks: “If someone […] presses the ‘create’ button, who is the artist?”

Past

Third, there are drawings made from memory. In the same way that to portray the present is to discover it, to draw from memory means that the artist must “dredge his own mind, to discover the content of his own store of past observations”. In this case, the artist neither studies reality nor imagines an alternative; “the drawing simply declares: I saw this. Historic past tense”.

Just as AIs can be said to “see”, albeit not fully, they can also be said to have memory, though one that differs from human memory. AIs are trained on vast datasets containing far more information than a person could ever absorb. The machine processes this data and draws on it selectively to respond to the commands it receives. Along the way, what the program learns is also stored, which in turn generates new “memories”.

For Berger, however, the best drawings created from memory “are made in order to exorcise a memory which is haunting, in order to take an image once and for all out of the mind and put it on paper”. Generative AI certainly does not have to deal with these kinds of concerns, but it is worth noting that developing computers able to identify emotions and respond accordingly is a growing field, relevant to the care of dependent people, and one that has also been explored in the art world. In 2013, a British researcher developed, with no little irony, an AI that would paint in a museum according to its mood. Some days it was supposedly so depressed that it kicked visitors out, telling them it wasn’t in the mood to paint.

Future

Berger refers to these three categories throughout Berger on Drawing, but in one of his texts he adds a fourth, exceptional one, which can arise from any of the other three. When “sufficiently inspired, when they become miraculous, drawings acquire another personal dimension”, regardless of the category to which they belong. In such cases, the image reflects something that acquires a special dignity, which could be described as “future”.

Whether an AI will be able to generate works that could be classed in this category is a subjective matter. After all, there is usually no consensus on what is good or bad human art either. What does seem clear is that generative AI will continue to stir up debates not only about art and creativity, but also about various other issues such as ethics, misinformation and intellectual property.

What would Berger think of generative AI? In the last few years of his life, the British author also became interested in artificial intelligence and its impact on society. In one of his last essays, entitled DALL·E 2: The New Mimesis, Berger points out that the creation of AI like DALL·E raises fundamental questions about originality in art. If AI is able to create works from verbal instructions, can we consider those works as truly original or simply an imitation of what we ask it to create?

Unfortunately, the entire paragraph above is false: Berger died in 2017, years before DALL·E 2 existed. It is a cut-and-paste of the perfectly reasoned but erroneous response that the generative AI ChatGPT gave to the prompt “write an article about John Berger and DALL·E 2”. As AIs continue to improve this and other results, and as we learn to refine our prompts, we will have to keep reading Berger to imagine what his answer would have been.
