A DMV employee gives an eye exam, c.1933 | Wikimedia Commons | CC BY

Our minds work on the basis of biases. This helps us to prioritise information and make decisions more quickly. But it also means our judgements are not as rational as they may seem. We analyse how some of these cognitive biases work and the extent to which we can correct them.

When was the last time you changed your mind about something you thought was set in stone? You probably don’t remember, and the fact is that changing our mind runs partly contrary to the evolution of our brains. We live and coexist in a complex world saturated with information. At every step, at every moment and in every interaction, we make a large number of conscious and unconscious decisions. Uncertainty and complexity are no friends to survival, especially when decisions have to be made quickly, and heuristics are the tool we have forged over the course of our evolution to avoid collapsing from exhaustion.

Long before the explosion of digital media, we already had a problem of scale: while our senses can take in 11 million bits per second, our mind can only process 50. Imagine a funnel that filters all these bits, dedicated exclusively to reducing complexity, ignoring stimuli and prioritising some pieces of information over others. This is heuristics: the set of mental shortcuts that allow us to function. Seen this way, our implicit cognitive biases are the human version of a decision algorithm.

In the early 1970s, psychologists Amos Tversky and Daniel Kahneman published an article in which they questioned the assumptions of rational choice theories and showed that, in situations of uncertainty, it is cognitive biases that predominate. Reaching a rational decision depends on the amount of information available for making a logical choice. However, situations of perfect information are more illusory than feasible, since we can never be certain that we have 100% of the information, nor that it is reliable, objective and neutral. What Tversky and Kahneman were demonstrating (Kahneman received the Nobel Prize in Economics in 2002; Tversky had died in 1996) was that even when facts, data and evidence exist, we find it hard to admit that we could be wrong because, in a way, this would call into question our map of everyday shortcuts. This bias is known as “naïve realism”. And as much as we know that the map is not the territory, the map is the representation to which we cling. If heuristics are the mechanism, cognitive biases establish the criteria that lead us to operate through prejudices.

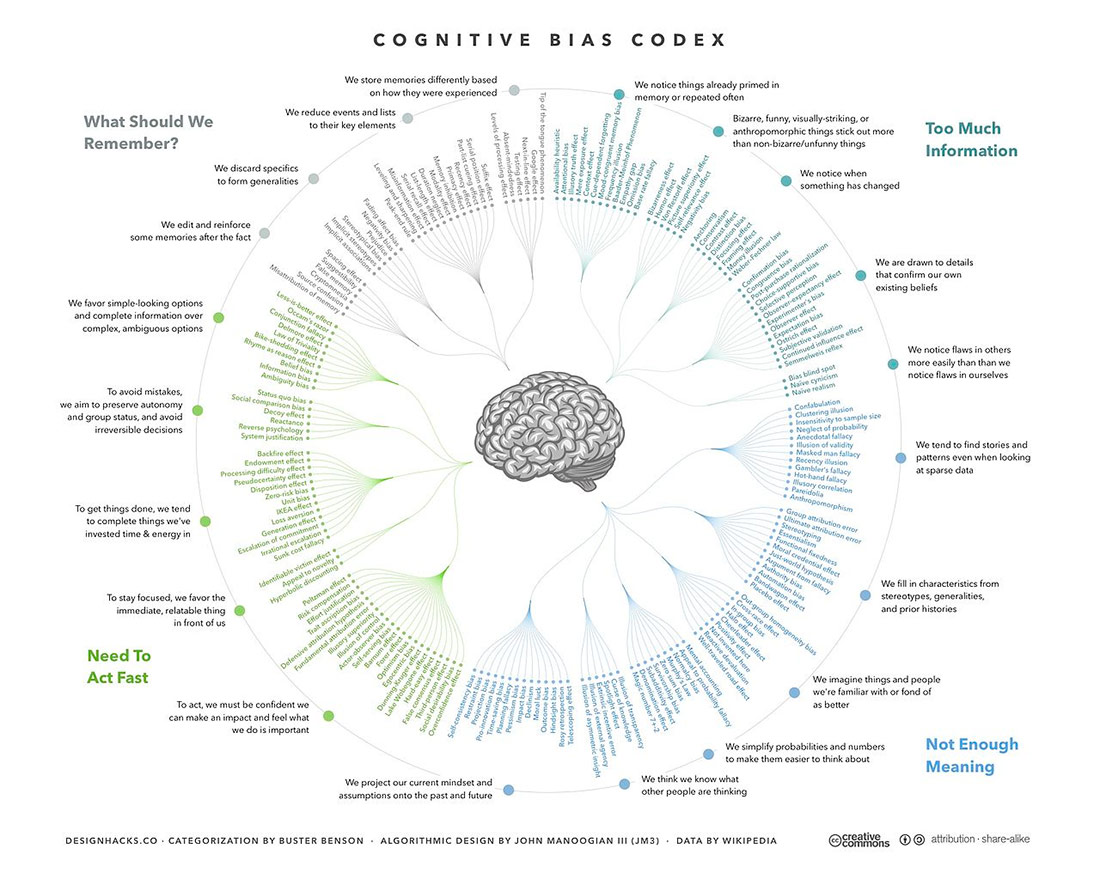

The field of psychology has identified and typified a whole range of cognitive biases. One of the most comprehensive compilations of our cognitive architecture is the Cognitive Bias Codex from 2016, which includes more than 180.

Cognitive Bias Codex | John Manoogian III | CC BY-SA

If you browse through the codex for a while, you will see that the biases are grouped into four spheres. One group of biases is linked to perception and the need to reduce the complexity of information. The best known is confirmation bias, which leads us to believe whatever reinforces our convictions and to doubt whatever contradicts them. We heard a lot about this bias during the infodemic of fake news that circulated in the first year of the COVID-19 pandemic. Selective perception is why, when you’ve broken your arm, you can’t stop noticing people in the street with their arm in a sling, or why, once you’ve set your heart on a particular jacket, you see it everywhere.

The second block has to do with conferring meaning, finding patterns and creating stories about what we don’t know. This includes everything from authority bias to pareidolia (when we make out shapes in the clouds, for example, endowing vague visual stimuli with meaning). If you trust someone in a white coat more than someone wearing jeans, it is because we associate certain clothing with expertise or legitimacy. Even our empathy is selective and guided by familiarity: we feel more connection and trust towards those with whom we have something in common (school, neighbourhood, language, hobby, etc.). The implicit message is that if they do the same things as us, they must also be trustworthy. The halo effect makes us associate beauty with goodness, and has been shown to play a role in many selection processes: people who are attractive according to the prevailing canons of beauty are more likely to get a job than someone with the same CV but less sex appeal.

The fact that heuristics help us make decisions quickly makes them a gift from a marketing point of view. The fear of scarcity and the aversion to missing out come into play every time you use a search engine to book a room and a message such as “this is the last room available for these dates” pops up. This goes hand in hand with the bandwagon or social validation effect, when you are told that the room is in high demand and has been booked 30 times in the last 24 hours. Who would want to miss out on such a gem? And if the cancellation policy is not too strict, you book almost before you realise it.

Memory and forgetting are part of the human condition, and just as perception is selective, so too is what we retain in our memory. In fact, memories are dynamic – they evolve over time according to the experiences we have and how we revisit them. If someone asks whether you have put salt in the stew you are cooking on the hob, the first time you can confidently answer that you have. By the second or third time, you will start to doubt, even if you have. A classic study on eyewitnesses and false memory is the one by Elizabeth Loftus. Participants watched a video of a traffic accident in which two cars collided and were then questioned by the researchers. For half of them the question was “how fast were the cars going when they hit each other?”, while for the other half the wording was changed to “when they smashed into each other”. A week later, they were asked whether they had seen broken glass in the footage, and those who had been given the second wording were more likely to say yes (even though no broken glass could be seen in the video). The humour effect also plays an important role in memory: things that make us laugh are easier to remember because they require more mental processing, and they make a greater impression because they tend to stand out.

If you are thinking that none of this happens to you, or that it doesn’t affect you much, that is another textbook bias: the bias blind spot. I invite you to take a test, this time linked to the prejudices and implicit associations that are activated when we walk past strangers in the street. Depending on their age, physical condition, apparent ethnic origin, clothing and other visible features, we unconsciously label them. Above all, this helps us decide whether to trust them or cross to the other side of the street. When you have 10 minutes, log on to Project Implicit and explore your own shortcuts. You can choose tests on skin colour, age, body weight, gender, sexual identity, religion, and so on.

Even if you don’t take the tests, just by looking at the list to choose from you will realise that the fixation on questions of race, for example, has a lot to do with the fact that the tests were developed by American researchers. And this is the main limitation of heuristic theories: socio-demographic and cultural biases shape these shortcuts. The mechanism may be the same, but two people can read the same article and come away with different conclusions, each in support of their own beliefs. We can even get into metatheory and ask ourselves to what extent the more than 180 biases described in the codex are themselves confirmations of what the research teams were looking for. And given that samples of volunteer participants are often made up of students, couldn’t what they describe simply be the cognitive map of very specific groups?

The rise of the digital economy and the proliferation of disinformation have generated an explosion of interest in these theories, as they underpin a large part of the research and business models based on decision-making. However, there are individual and social factors that make us more or less susceptible. If we look specifically at disinformation, age is the key factor: people over 65 are the most concerned and the most susceptible. This is closely linked to level of education and media literacy. Gender has a large impact on the range of topics that attract people’s attention, and some studies suggest that men are more likely to consume fake news about politics, especially those at the more conservative end of the spectrum. Confirmation bias and existing beliefs play a major role. If we see a headline that reflects our opinion, we will trust our convictions even if the digital platform warns us that we may be reading fake content. And if we are educated to degree level, it will be much harder to make us doubt, because our self-confidence will be higher. That is why you will rarely convince anyone just by showing them facts and figures. You can’t swap one idea for another in someone’s head, as in the film Inception. What usually works is to ask them what their assertions are based on.

In fact, intersectionality is key to understanding how we digest information from the world around us. Being in situations of financial, personal, educational or psychological vulnerability increases susceptibility to disinformation and the tendency to believe in conspiracy theories.

Becoming aware of the cognitive biases that influence our day-to-day lives won’t help us to evade them, but it will allow us to decide whether to act on autopilot or to give more time to reflection. In some ways, this links in with the discussion about the values implicit in algorithms (which, at the end of the day, are still programmed by people with their own cognitive short-sightedness). The difference is that in programming there is room (or this could be written into regulation) to decide consciously and by consensus which biases we incorporate. And in fact, we are so comfortable with biases that in studies with social robots we end up preferring robots that are biased: we perceive the interaction as more intuitive, pleasant and easy, which makes them more acceptable to us. Maybe that’s why we’re biased and happy: if ignorance is bliss, then made-to-measure glasses should make life more comfortable for us.
