Fragment of Radiolaria, illustration serie by Ernst Haeckel (1862). Source: Biodiversity Heritage Library. Source: Harvard University.

Big Data is the new medium of the second decade of the twenty-first century: a new set of computing technologies that, like the ones that preceded it, is changing the way in which we access reality. Now that the Social Web has become the new laboratory for cultural production, the Digital Humanities are focusing on analysing the production and distribution of cultural products on a mass scale, in order to participate in designing and questioning the means that have made it possible. As such, their approach has shifted to looking at how culture is produced and distributed, and this brings them up against the challenges of a new connected culture.

5,264,802 text documents, 1,735,435 audio files, 1,403,785 videos, and over two billion web pages that can be accessed through the WayBack Machine make up the inventory of the Internet Archive at the time of writing. Then there are also the works of over 7,500 avant-garde artists archived as videos, pdfs, sound files, and television and radio programmes on UBUWEB, the more than 4,346,267 entries in 241 languages submitted by the 127,156 active users that make up Wikipedia, and the ongoing contributions of more than 500 million users on Twitter. And these are just a few examples of the new virtual spaces where knowledge is stored and shared: open access, collaboratively created digital archives, wikis and social networks in which all types of hybridisations coexist, and where encounters between different types of media and content take place. As a whole, they generate a complex environment that reveals our culture as a constantly evolving process.

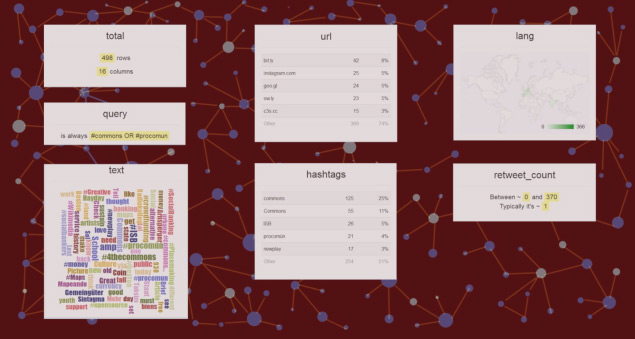

Twitter data visualisation. Generated by Scraperwiki.

In the 1990s, computers were seen as “remediation machines”, or machines that could reproduce existing media, and the digital humanities focused on translating the documents of our cultural heritage, tributaries of print culture, into the digital medium. It was a process that reduced the documents to machine readable and operable discrete data. As Roberto A. Busa explains in the introduction to A Companion to Digital Humanities, humanities computing is the automation of every possible analysis of human expression. This automation enhanced the capabilities of documents, which gradually mutated into performative messages that replaced written documents as the principal carriers of human knowledge. Meanwhile, the processes used to analyse and reproduce the texts were also used to develop tools that would allow users to access and share this content, and this brought about a change from the paradigm of the archive to that of the platform. These twin processes transformed the way research is carried out in the humanities, and determined the content of Digital Humanities 2.0.

Scanning or transcribing documents to convert them to binary code, storing them in data bases, and taking advantage of the fact that this allows users to search and retrieve information, and to add descriptors, tags and metadata, all contributed to shaping a media landscape based on interconnection and interoperability. Examples of projects along these lines include digital archives such as the Salem Witch Trial, directed by Benjamin Ray at the University of Virginia in 2002, which is a repository of documents relating to the Salem witch hunt. Or The Valley of Shadow archive, put together by the Center for Digital History also at the University of Virginia, which documents the lives of the people from Augusta County, Virginia, and Franklin County, Pennsylvania, during the American Civil War. More than archives, these projects become virtual spaces that users can navigate through, actively accessing information through a structure that connects content from different data bases, stored in different formats such as image, text and sound files. The creation of these almost ubiquitously accessible repositories required a collaborative effort that brought together professionals from different disciplines, from historians, linguists and geographers to designers and computer engineers. And the encounter between them led to the convergent practices and post-disciplinary approach that came to be known as Digital Humanities. These collaborative efforts based on the hybridisation of procedures and forms of representation eventually led to the emergence of new formats in which the information can be contextualised, ranging from interactive geographic maps to timelines. An example of these types of new developments is the Digital Roman Forum project, carried out between 1997 and 2003 by the Cultural Virtual Reality Laboratory (CVRLab) at the University of California, Los Angeles (UCLA), which developed a new way of spatializing information. The team created a three-dimensional model of the Roman Forum that became the user interface. It includes a series of cameras, aimed at the different monuments that are reproduced in the project, allowing users to compare the historical reproduction with current images. It also provides details of the different historical documents that refer to these spaces, and that were used to produce the reproduction.

This capacity for access and linking is taken beyond the archive in projects such as Persus and Pelagios, which allow users to freely and collectively access and contribute content. These projects use standards developed in communities of practice to interconnect content through different online resources. They thus become authentic platforms for content sharing and production rather than simple repositories. The digital library Perseus, for example, which was launched in 1985, relies on the creation of open source software that enables an extensible data operation system in a networking space, based on a two-level structure: one that is human-accessible, in which users can add content and tags, and another that incorporates machine-generated knowledge. This platform provides access to the original documents, and links them to many different types of information, such as translations and later reissues, annotated versions, maps of the spaces referred to… and makes it possible to export all of this information in xml format. Meanwhile, the Pelagios project, dedicated to the reconstruction of the Ancient World, is based on the creation of a map that links historical geospatial data to content from other online sources. When users access a point on the map-interface they are taken to a heterogeneous set of information that includes images, translations, quotes, bibliographies and other maps, all of which can be exported in several file formats such as xml, Json, atom and klm.

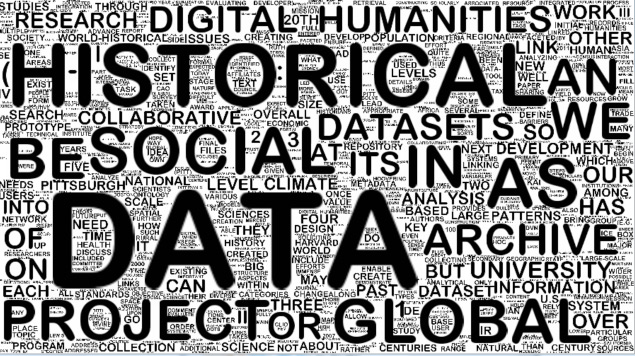

WorldCloud of abstracts of the most recent congress organised by the ALLC: The European Association for Digital Humanities. Generated with Processing.

These projects are examples of the computational turn that David M. Berry theorises in The Computational Turn: Thinking about Digital Humanities: “Computational techniques are not merely an instrument wielded by traditional methods; rather they have profound effects on all aspects of the disciplines. Not only do they introduce new methods, which tend to focus on the identification of novel patterns in the data against the principle of narrative and understanding, they also allow the modularisation and recombination of disciplines within the university itself.” The use of automation in conjunction with digitalisation not only boosts capabilities for analysing text documents, it also creates new capabilities for remixing and producing knowledge, and promotes the emergence of new platforms or public spheres, in which the distribution of information can no longer be considered independently of its production.

In The Digital Humanities Manifesto 2.0 ( written in 2009 by Jeffrey Schnapp and Todd Presner, this computational turn is described as a shift from the initial quantitative impulse of the Digital Humanities to a qualitative, interpretative, emotive and generative focus. One that takes into account the complexity and specificity of the medium, its historical context, its criticism and interpretation. This reformulation of objectives sees digital media as profoundly generative and analyses the digital native discourse and research that have grown out of these emergent public spheres, such as wikis, the blogosphere and digital libraries. It thus allows users to become actively involved in the design of the tools, the software, that have brought about this new form of knowledge production, and in the maintenance of the networks in which this culture is produced. This new type of culture is open source, targeted at many different purposes, and flows through many channels. It stems from process-based collaboration in which knowledge takes on many different forms, from image composition to musical orchestration, the critique of texts and the manufacturing of objects, in a convergence between art, science and the humanities.

The generative and communicational capabilities of new media have led to the production and distribution of cultural products on a mass scale. At this time in history, which Manovich has dubbed the “more media” age, we have to think of culture in terms of data. And not just in terms of data that is stored in digital archives in the usual way, but also that which is produced digitally in the form of metadata, tags, computerised vision, digital fingerprints, statistics, and meta-channels such as blogs and comments on social networks that make reference to other content; data that can be mined and visualised, to quote the most recent work by the same author, «Sofware takes command». Data analysis, data mining, and data visualisation are now being used by scientists, businesses and governments as new ways of generating knowledge, and we can apply the same approach to culture. The methods used in social computing –the analysis and mapping of data produced through our interactions with the environment in order to optimise the range of consumer products or the planning of our cities, for example– could be used to find new patterns in cultural production. These would not only allow us to define new categories, but also to map and monitor how and with what tools this culture is produced. The cultural analysis approach that has been used in the field of Software Studies since 2007 is one possible path in this direction. It consists of developing visualisation tools that allow researchers to analyse cultural products on a mass scale, particularly images. For example, a software programme called ImagePlot and high-resolution screens can be used to carry out projects based on the parameterisation of large sets of images in order to reveal new patterns that challenge the existing categories of cultural analysis. One of these projects, Phototrails, for example, generates visualisations that reveal visual patterns and dynamic structures in photos that are generated and shared by the users of different social networks.

Another approach can be seen in projects that analyse digital traces and monitor knowledge production and distribution processes. An example of this approach is the project History Flow by Martin Wittenberg and Fernanda Viégas –developed at the IBM Collaborative User Experience Research Group–, which generates a histogram of the contributions that make up Wikipedia.

Big Data applied to the field of cultural production allows us to create ongoing spatial representations of how our visual culture is shaped and how knowledge is produced. This brings the Digital Humanities up against the new challenges of a network-generated data culture, challenges that link software analysis to epistemological, pedagogic and political issues and that raise many questions, such as: how data is obtained, what entities should we parameterise, at the risk of failing to include parts of reality in the representations; how we assign value to these data, considering that this has a direct effect on how the data will be visualised, and that the great rhetoric power of these graphic visualisations may potentially distort the data; how information is structured in digital environments, given that the structure itself entails a particular model of knowledge and a particular ideology; how to maintain standards that enable data interoperability, and how to go about the political task of ensuring ongoing free access to this data; what new forms of non-linear, multimedia and collaborative narrative can be developed based on this data; the pedagogical question of how to transmit an understanding of the digital code and algorithmic media to humanists whose education has been based on the division between culture and science; and, lastly, how to bring cultural institutions closer to the laboratory, not just in terms of preservation but also in the participation and maintenance of the networks that make knowledge production possible.

Leave a comment