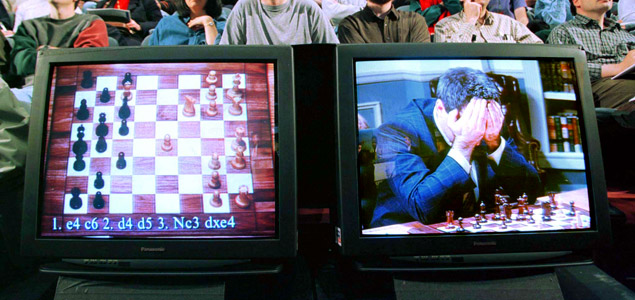

The victory of Deep Blue against Kasparov in 1997.

The angst provoked by good zombie stories (Night of the Living Dead, Dead Set, Dawn of the Dead…) basically arises from the inevitability of the ending. In these stories, the irrevocable, exponential spread of the zombie in the struggle for the largest share of the population pie is virtually always presented as the triumph of stupidity (embodied in the zombie) over intelligence (represented by homo sapiens). The zombie epidemic seems aberrant because it entails the destruction of enlightenment, the end of civilisation, of the distinctive trait that supposedly defines mankind. Even if film zombies would probably be our closest relatives in an alternative taxonomy of mammals. The only difference between them and us is an alteration of the vital signs and a discrepancy in the perceptual/analytical mechanism that we usually call intelligence. The similarity between their physiology and our own is precisely why the concept of the zombie (not the film zombie but a purely hypothetical type) is often used in the philosophy of mind as a starting point for arguments against physicalism – a doctrine holding that everything that exists is purely physical. The fact that philosophical and film zombies don’t live in our immediate surroundings is beside the point: they offer a privileged, up-close starting point for exploring the concepts of intelligence, consciousness and subjectivity. Because in spite of its ubiquity, “intelligence” is actually one of the vaguest terms in our vocabulary and it has many definitions that can adapt to the many possible uses of the word.

One of the most common – and controversial – uses of this word outside of the scope of human activity is artificial intelligence (AI). Almost sixty years after John McCarthy coined the term in 1955, the field of AI still generates so many interpretations that it is difficult to find any common ground between its detractors and advocates, or even between the opposite extremes of the AI community, which spans from Ray Kurzweil’s naïve euphoria to the pessimism of Hubert Dreyfus. Not too long ago, McCarthy himself tried to sum up the framework of the discipline as “the science and engineering of making intelligent machines.” But even the simplest and least ambitious definitions come up against the trap hidden in the title – the term “intelligence”. How can we accurately define a concept that we only understand superficially? How can we avoid falling into what Daniel Dennett calls the “heartbreak of premature definition”? McCarthy’s answer to the preliminary question “what is intelligence?” is “the computational part of the ability to achieve goals.” And though this is just one of dozens of conceivable definitions (there are many of them, formulated in fields such as biology, philosophy and psychology), his answer, like many others, opens the door to considerations that reach far beyond the sphere of homo sapiens.

Plants, microorganisms, collectives

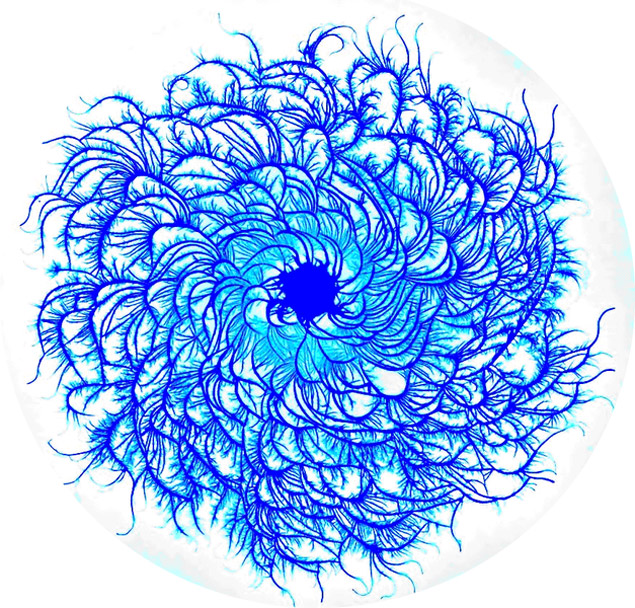

For years, some researchers have been defending the notion of plant intelligence, which Anthony Trewavas (from the University of Edinburgh) describes as “the emergent property that results from the collective interactions between the various tissues of the individual growing plant.” In his fascinating recent book “What a Plant Knows: A Field Guide to the Senses”, another prominent champion of the idea, Daniel Chamovitz (from Tel Aviv University), adds: “While we could subjectively define ‘vegetal intelligence’ as another facet of multiple intelligences, such a definition does not further our understanding of either intelligence or plant biology. The question, I posit, should not be whether or not plants are intelligent – it will be ages before we all agree on what that term means; the question should be, “Are plants aware?” and, in fact, they are.” Once again, Chamovitz and Trewavas are defending an idea of awareness that has few similarities to human awareness. Firstly, because it refers to an anatomy that is totally unlike that of mammals. We know that human consciousness has some kind of link to the neural networks in our brains, and while plants don’t have actual neurons, their networks of cells are connected in a similar way. The neocortex in humans – the part of the brain that looks after tasks such as sensory perception, spatial reasoning, motor commands and language – is different to the cell networks of plant organisms. But both are memory systems that create a model of the world in order to formulate predictions and act accordingly. Structural differences aside, if we accept that one of the essential conditions of what we call intelligent behaviour is the ability to predict the future – identifying patterns in the environment in order to adapt to them – then plants and even very primitive bacteria easily fit into the category, as argued in the late 1990s by Roger Bingham and Peggy La Cerra.
More recent studies in the field of microbiology have started to talk about bacterial intelligence, pointing out the communication and organisational abilities of “social” bacteria such as Paenibacillus dendritiformis.

Paenibacillus dendritiformis.

Jeff Hawkins, founder of the Redwood Center for Theoretical Neuroscience (and also of Palm Computing, for those nostalgic about consumer technology), claims that a detailed observation of the physiology of the neocortex would allow us to pinpoint a short list of the characteristics and conditions that are essential for intelligence, and that we will then be able to extrapolate them to any system, which does not necessarily have to be human. Or even necessarily biological. Hawkins is not the only scientist who argues that some of the attributes that we have considered to be sine qua non conditions for intelligent behaviour for hundreds of years – such as language – would actually not be on this basic list of essential characteristics. In 2001, the electro-acoustic composer, activist and environmental researcher David Dunn took Wittgenstein’s famous quote “if a lion could speak, we could not understand him” as the starting point for reflections published in “Nature, Sound Art and the Sacred” on the sensocentric supremacy that humans have traditionally imposed on the non-human world. Dunn argued that the problem can partly be traced back to the assumption that since animals do not possess language like humans do, they are simply organic machines to be ruthlessly exploited. “New evidence suggests that thinking does not require language in human terms and that each form of life may have its own way of being self-aware. Life and cognition might be considered to be synonymous even at the cellular level.” Since Classical Greece, humans have used the concept of the rational animal to arbitrarily separate homo economicus from the rest of the animal kingdom, even though in a literal sense rationality simply means the ability to make optimal decisions in order to achieve objectives. And this, once again, applies to most known life forms.

Other species on our planet may not have the skills required to contemplate the infinity of the universe or to invent a jet engine, but we shouldn’t forget that mankind achieved these milestones through the accumulated intelligence of the species rather than one by one, with our own individual perceptual/analytical abilities. Networks of individuals rather than neurons. Thomas W. Malone, Director and founder of the MIT Center for Collective Intelligence, dedicates much of his teaching and research to the study of collective intelligence, which he broadly describes as “groups of individuals acting collectively in ways that seem intelligent” – a way of looking at behaviour that functions as a human/non-human two-way mirror. Malone says: “groups of ants can very usefully be viewed as intelligent probably more than the individual ants themselves. It’s clearly possible to view groups of humans and their artefacts, their computational and other artefacts, as intelligent collectively as well. That perspective raises not only deep and interesting scientific questions, but also raises what you might think of as even philosophical questions about what we humans are as groups, not just as individuals.” Similarly, but from a neutral ground that straddles computing, neuroscience and philosophy, the mathematician and AI maverick Marvin Minsky has been defending what he calls the theory of The Society of Mind for many years. This theory, which is reminiscent of the ideas of Bruno Latour, explains the minds of humans and other natural cognitive systems as an enormous society made up of individual processes known as “agents” that come together to form the mind.

The xenomorph and the post-human

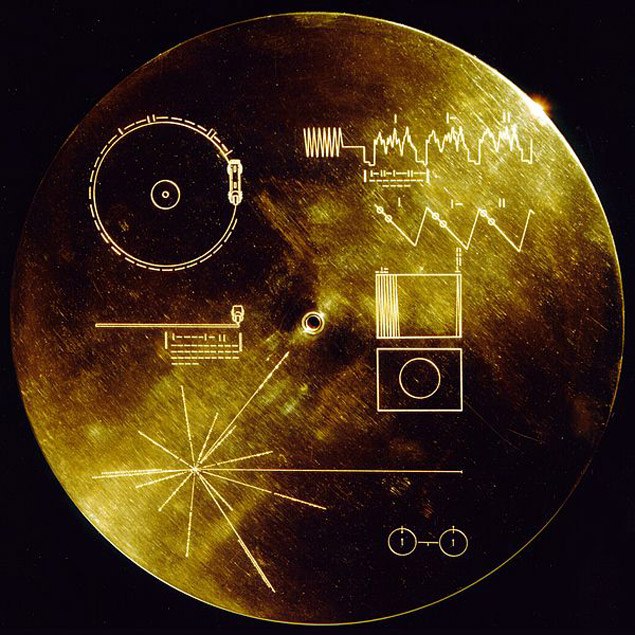

But the rampant anthropocentrism that goes back to time immemorial and has dictated the notions of intelligence that still prevail in Western culture today goes beyond life on planet Earth. Many attempts to find intelligent life in outer space, for example, are based on the goal of finding extraterrestrial beings, species and civilisations that are similar to homo sapiens. Few human artefacts are better able to illustrate this mix of arrogance and stupidity than the Voyager Golden Record that was launched into space in 1977. The record, selected by a committee chaired by Carl Sagan, consists of a selection of images and sounds that were intended to show extraterrestrial life forms the biodiversity and cultural mix of planet Earth. Fortunately, it is accompanied by a message from Jimmy Carter that explains the good intentions behind the project and offers detailed playback instructions, including the correct time of one rotation (in binary arithmetic), expressed in time units of 0.70 billionths of a second (the period of time associated with the fundamental transition of the hydrogen atom). So, even if we leave aside the fact that Voyager 1 will take around 40,000 years to be relatively close (within 1.6 light years) to the star Gliese 445, the project assumes that the hypothetical extraterrestrial life form will:

- have audio and visual sensory devices that are at least remotely similar to our own, so that they can listen to and see the frequencies encoded on the record.

- have some kind of prehensile system that will allow them to place the needle in the groove.

- understand basic English (to decipher Carter’s message).

- be a civilisation with expertise in chemistry, particularly in the study of hydrogen.

- and in binary arithmetic.

Voyager Golden Record.

It seems outrageous to assume that all other intelligent life forms in the Universe are similar to ourselves rather than to dolphins (thanks as always, Douglas Adams), spores, or gas clouds. We shouldn’t be surprised if the “xenomorph” (to borrow a science-fiction term with strong speciesist connotations) turns out to be brutally different to any known creature, or if it fails to follow familiar concepts such as bilateral symmetry. The only crew member that appreciates the “perfection” of the xenomorph in Alien (1979) is Ash, the android on the Nostromo… In reality, we don’t need to leave our own planet to encounter organisms that challenge our stereotypical ideas about anatomy and life cycles. Slime moulds, for example, spend their lives as single-cell entities unless food is scarce, and then they come together to form a pseudoplasmodium made up of many different individuals. The studies carried out over the past few decades on the ability of these brainless organisms to find their way around, and on their “memory”, are particularly interesting in relation to the debate on intelligence.

Slime moulds.

In his 2010 documentary Into Eternity, Michael Madsen takes a much more interesting approach to the issue of post-human contact than the Voyager Golden Record. Faced with the need to store nuclear waste in an underground base in Finland, the people in charge of the repository try to work out how they can warn future generations and future civilisations, both human and non-human, of the danger that will remain present even thousands of years later. It is an impressive lesson in collective psychology that tries to push mental speculation to the limit of human thought, in order to anticipate – unsuccessfully – possible post-human interpretations of the message. Madsen’s film implies that we cannot predict how a post-human civilisation would interpret the warnings, just as we cannot participate in the subjective experience of other species on our planet. The core idea of Thomas Nagel’s famous article “What is it Like to be a Bat?” (1974), the fact that we can never escape our first-person experience, is still relevant to many reflections on “the hard problem of consciousness”. But in spite of this impossibility, we don’t hesitate to put ourselves in the shoes of other organisms and to limit the boundaries of what we consider to be conscious, rational, intelligent behaviour. Given this insurmountable obstacle, perhaps we should blur these boundaries altogether, seeing as we will never know what it is like to be a bat, a spore or a black hole, or what they feel. It’s perfectly possible that bats, spores and black holes do not have conscious experience of any kind, but we will never find out for sure. The carbocentrism derived from the different versions of the anthropic principle remains deeply entrenched in our subconscious, and that of the scientific community. For now, the only life forms that we know are carbon-based molecular systems, but it seems a bit arrogant to categorically deny other possibilities.

From Turing to Singularity: Artificial intelligence

Almost from the outset, the road map for artificial intelligence has been stigmatised by ideas that are relatively out of touch with reality, and that define what we have come to expect from intelligent machines. The famous test proposed by Alan Turing in 1950 is one of these poisoned chalices, although this was due to later interpretations rather than to Turing’s original intentions, given that he repeatedly expressed his total lack of interest in the debate about whether or not AI was possible. For Turing, the idea of judging the intelligence of a machine according to its ability to emulate human behaviour was a simple phenetic criterion and not a universal yardstick. Nonetheless, his famous inductive inference test has had a profound influence on society’s perception of AI. While the various branches of scientific research don’t seem too concerned about passing the Turing Test, a considerable number of AI projects are still based on emulation. In “Human-Machine Reconfigurations”, as a kind of pseudo-scientific remix of the creationist, Pygmalionist drive that is so firmly rooted in Western culture (Golem, Pinocchio, Frankenstein, C-3PO), Lucy Suchman lists the three components that she believes are indispensable in order to guarantee the “human” nature of any artificial intelligence project: embodiment, emotion and sociability. Fortunately, most AI research seems to be more interested in exploring the actual potential of machine intelligence, to generate models that can help to improve our understanding of cognition. One of the advocates of behaviour-based robotics, Belgian scientist Luc Steels, explains: “there are two basic motivations for work in AI. The first motivation is purely pragmatic. Many researchers try to find useful algorithms – such as for data mining, visual image processing, and situation awareness – that have a wide range of applications. (…) The second motivation is scientific. Computers and robots are used as experimental platforms for investigating issues about intelligence. (…) Most people in the field have known for a long time that mimicking exclusively human intelligence is not a good path for progress, neither from the viewpoint of building practical applications nor from the viewpoint of progressing in the scientific understanding of intelligence. Just like some biologists learn more about life from studying a simple E.coli bacteria, there is a great value in studying aspects of intelligence in much simpler forms.” Even so, AI continues to come up against what scientists ironically call “the AI effect”: the tendency to discredit any new achievements with the excuse that they are not based on “real” intelligent behaviour, but on software that somehow simulates it. Not even the media-friendly milestones of recent years (the victory of Deep Blue against Kasparov in 1997, Watson getting the upper hand over the former absolute champions of the quiz show Jeopardy! in 2011) have been able to diminish the constant denial. Australian scientist Rodney Brooks, one of the pioneers of robotics who began working in the field in the 1980s, mentioned the AI effect in an interview published in Wired magazine in 2002, saying that his colleagues often joked that “AI” actually stands for “almost implemented”.

Pushing the boundaries

Mankind’s refusal to accept other types of intelligence is basically rooted in the fear of losing our privileged status in the known Universe – it’s no coincidence that we have chosen to designate ourselves as the doubly narcissistic “homo sapiens sapiens”. Breaking the exclusivity of the contract that binds intelligence to humans is hard to swallow. But the confusion also lies in the simple fact that many of the theories mentioned here use traditionally “human” terminology (consciousness, the mind, thought, etc.) to describe the properties of non-human systems. Drew McDermott has even suggested that we should adopt the term “cognitive computing” instead of “artificial intelligence”. In any case, the anthropocentrism that inevitably shapes our experience is totally at odds with radical notions such as the supposed predictive modelling that certain microorganisms engage in, or Irving John Good’s theory of Singularity and his bold 1965 prediction of the arrival of the first ultraintelligent machine. Unable to surmount this obstacle, mankind clings to species chauvinism and hides behind the real difficulties that it contains, such as “the problem of other minds”, one of the classic and insuperable challenges of epistemology, which asks: given that I can only observe the behaviour of others, how can I know that others have minds? The question works at the level of metaphor (as in philosophical zombies) and practice (AI), and is an enormous challenge when it comes to admitting the validity of other forms of intelligence: when it comes to accepting what Ian Bogost calls alien phenomenology, or the possibility of what Kristian Bjørn Vester (Goodiepal) describes as alternative intelligences (ALI), to mention my two current favourite examples.

If we are ever to recognise the multiplicity of intelligences, we will have to stop analysing them through our own reflection, and disregard notions with strong speciesist overtones such as “mind”, which may have less to do with the matter than we have assumed up until now. In a recent article published in Physical Review Letters, Alex Wissner-Gross (MIT) and Cameron Freer (University of Hawaii) suggest a possible first step towards explaining the link between intelligence and the maximisation of entropy, which, they say, provokes the spontaneous emergence of two typically human behaviours (the use of tools and social cooperation) in simple physical systems. And here we are not talking about living organisms such as plants, or decentralised termite colonies, or smart mammals such as dolphins, or computers with billion-dollar budgets like Watson. We’re talking about pendulums.

Saty Raghavachary | 05 June 2021

Hi Roc, LOVE your article on MULTIPLICITY…

I pondered this as well, and came up with this definition of intelligence: ‘considered response’ – it covers all you mention and more – slime molds, ant colonies, plants, viruses, humans… 🙂

Here is my writeup: https://www.researchgate.net/publication/346786737_Intelligence_-_Consider_This_and_Respond
