Pierre Lévy is a philosopher and a pioneer in the study of the impact of the Internet on human knowledge and culture. In Collective Intelligence. Mankind’s Emerging World in Cyberspace, published in French in 1994 (English translation in 1999), he describes a kind of collective intelligence that extends everywhere and is constantly evaluated and coordinated in real time, a collective human intelligence, augmented by new information technologies and the Internet. Since then, he has been working on a major undertaking: the creation of IEML (Information Economy Meta Language), a tool for the augmentation of collective intelligence by means of the algorithmic medium. IEML, which already has its own grammar, is a metalanguage that includes the semantic dimension, making it computable. This in turn allows a reflexive representation of collective intelligence processes.

In the book Semantic Sphere I. Computation, Cognition, and Information Economy, Pierre Lévy describes IEML as a new tool that works with the ocean of data of participatory digital memory, which is common to all humanity, and systematically turns it into knowledge. It is a system for encoding meaning that adds transparency, interoperability and computability to the operations that take place in digital memory.

By formalising meaning, this metalanguage adds a human dimension to the analysis and exploitation of the data deluge that is the backdrop of our lives in the digital society. It also offers a new standard for the human sciences with the potential to accommodate maximum diversity and interoperability.

In “The Technologies of Intelligence” and “Collective Intelligence”, you argue that the Internet and related media are new intelligence technologies that augment the intellectual processes of human beings, and that they create a new space of collaboratively produced, dynamic, quantitative knowledge. What are the characteristics of this augmented collective intelligence?

The first thing to understand is that collective intelligence already exists. It is not something that has to be built. Collective intelligence exists at the level of animal societies: it exists in all animal societies, especially insect societies and mammal societies, and of course the human species is a marvellous example of collective intelligence. In addition to the means of communication used by animals, human beings also use language, technology, complex social institutions and so on, which, taken together, create culture. Bees have collective intelligence but without this cultural dimension. In addition, human beings have personal reflexive intelligence that augments the capacity of global collective intelligence. This is not true for animals but only for humans.

Now the point is to augment human collective intelligence. The main way to achieve this is by means of media and symbolic systems. Human collective intelligence is based on language and technology and we can act on these in order to augment it. The first leap forward in the augmentation of human collective intelligence was the invention of writing. Then we invented more complex, subtle and efficient media like paper, the alphabet and positional systems to represent numbers using ten numerals including zero. All of these things led to a considerable increase in collective intelligence. Then there was the invention of the printing press and electronic media. Now we are in a new stage of the augmentation of human collective intelligence: the digital or – as I call it – algorithmic stage. Our new technical structure has given us ubiquitous communication, interconnection of information, and – most importantly – automata that are able to transform symbols. With these three elements we have an extraordinary opportunity to augment human collective intelligence.

You have suggested that there are three stages in the progress of the algorithmic medium prior to the semantic sphere: the addressing of information in the memory of computers (operating systems), the addressing of computers on the Internet, and finally the Web (the addressing of all data within a global network, where all information can be considered to be part of an interconnected whole). This externalisation of the collective human memory and intellectual processes has increased individual autonomy and the self-organisation of human communities. How has this led to a global, hypermediated public sphere and to the democratisation of knowledge?

This democratisation of knowledge is already happening. If you have ubiquitous communication, it means that you have access to any kind of information almost for free: the best example is Wikipedia. We can also speak about blogs, social media, and the growing open data movement. When you have access to all this information, when you can participate in social networks that support collaborative learning, and when you have algorithms at your fingertips that can help you to do a lot of things, there is a genuine augmentation of collective human intelligence, an augmentation that implies the democratisation of knowledge.

What role do cultural institutions play in this democratisation of knowledge?

Cultural institutions are publishing data in an open way; they are participating in broad conversations on social media, taking advantage of the possibilities of crowdsourcing, and so on. They also have the opportunity to grow an open, bottom-up knowledge management strategy.

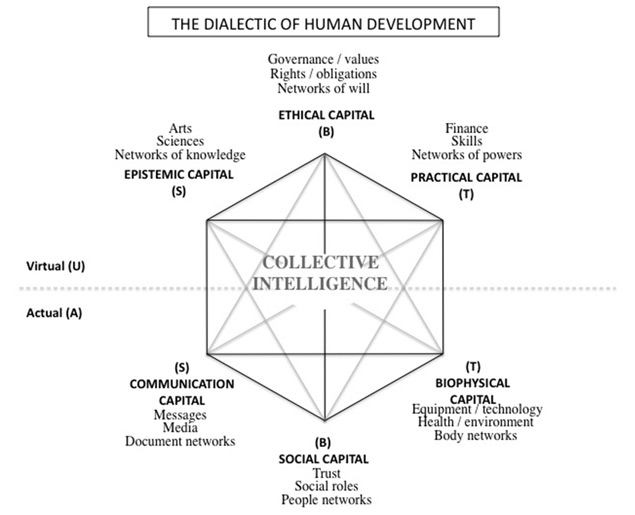

A Model of Collective Intelligence in the Service of Human Development (Pierre Lévy, in The Semantic Sphere, 2011). S = sign, B = being, T = thing.

We are now in the midst of what the media have branded the ‘big data’ phenomenon. Our species is producing and storing data in volumes that surpass our powers of perception and analysis. How is this phenomenon connected to the algorithmic medium?

First let’s say that what is happening now, the availability of big flows of data, is just an actualisation of the Internet’s potential. It was always there. It is just that we now have more data and more people are able to get this data and analyse it. There has been a huge increase in the amount of information generated in the period from the second half of the twentieth century to the beginning of the twenty-first century. At the beginning only a few people used the Internet and now almost half of the human population is connected.

At first the Internet was a way to send and receive messages. We were happy because we could send messages to the whole planet and receive messages from the entire planet. But the biggest potential of the algorithmic medium is not the transmission of information: it is the automatic transformation of data (through software).

We could say that the big data available on the Internet is currently analysed, transformed and exploited by big governments, big scientific laboratories and big corporations. That’s what we call big data today. In the future there will be a democratisation of the processing of big data. It will be a new revolution. If you think about the situation of computers in the early days, only big companies, big governments and big laboratories had access to computing power. But nowadays we have the revolution of social computing and decentralized communication by means of the Internet. I look forward to the same kind of revolution regarding the processing and analysis of big data.

Communications giants like Google and Facebook are promoting the use of artificial intelligence to exploit and analyse data. This means that logic and computing tend to prevail in the way we understand reality. IEML, however, incorporates the semantic dimension. How will this new model be able to describe the way we create and transform meaning, and make it computable?

Today we have something called the “semantic web”, but it is not semantic at all! It is based on logical links between data and on algebraic models of logic. There is no model of semantics there. So in fact there is currently no model that sets out to automate the creation of semantic links in a general and universal way. IEML will enable the simulation of ecosystems of ideas based on people’s activities, and it will reflect collective intelligence. This will completely change the meaning of “big data” because we will be able to transform this data into knowledge.

We have very powerful tools at our disposal, we have enormous, almost unlimited computing power, and we have a medium where communication is ubiquitous. You can communicate everywhere, all the time, and all documents are interconnected. Now the question is: how will we use all these tools in a meaningful way to augment human collective intelligence?

This is why I have invented a language that automatically computes internal semantic relations. When you write a sentence in IEML it automatically creates the semantic network between the words in the sentence, and shows the semantic networks between the words in the dictionary. When you write a text in IEML, it creates the semantic relations between the different sentences that make up the text. Moreover, when you select a text, IEML automatically creates the semantic relations between this text and the other texts in a library. So you have a kind of automatic semantic hypertextualisation. The IEML code programs semantic networks and it can easily be manipulated by algorithms (it is a “regular language”). Plus, IEML self-translates automatically into natural languages, so that users will not be obliged to learn this code.

The most important thing is that if you categorize data in IEML it will automatically create a network of semantic relations between the data. You can have automatically-generated semantic relations inside any kind of data set. This is the point that connects IEML and Big Data.
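
As a rough illustration of this idea, the sketch below shows how links between data items can fall out automatically once each item is categorised with terms whose mutual relations are already known. It is a toy model only and not IEML: the term dictionary `RELATED_TERMS`, the function names and the sample documents are all hypothetical, invented for this example.

```python
from itertools import combinations

# Hypothetical toy dictionary: each term lists the terms it is related to.
# These relations are invented for illustration, not taken from the IEML dictionary.
RELATED_TERMS = {
    "memory": {"knowledge", "archive"},
    "knowledge": {"memory", "learning"},
    "learning": {"knowledge"},
    "archive": {"memory"},
    "music": set(),
}


def terms_related(a, b):
    """Two terms are related if they are identical or linked in the toy dictionary."""
    return a == b or b in RELATED_TERMS.get(a, set()) or a in RELATED_TERMS.get(b, set())


def semantic_links(tagged_items):
    """Return every pair of items connected by at least one pair of related terms."""
    links = {}
    for (name_a, tags_a), (name_b, tags_b) in combinations(sorted(tagged_items.items()), 2):
        via = {(ta, tb) for ta in tags_a for tb in tags_b if terms_related(ta, tb)}
        if via:
            links[(name_a, name_b)] = via
    return links


# Hypothetical data set: each item is categorised with terms from the dictionary.
documents = {
    "museum_catalogue": {"archive"},
    "wiki_article": {"memory", "learning"},
    "concert_recording": {"music"},
}

for pair, via in semantic_links(documents).items():
    print(pair, "linked via", sorted(via))
```

Nobody declares the link between the two documents by hand; it follows from the categories assigned to them and from the relations already present in the dictionary. That is the property the answer above attributes to IEML, shown here in a drastically simplified form.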

So IEML provides a system of computable metadata that makes it possible to automate semantic relationships. Do you think it could become a new common language for human sciences and contribute to their renewal and future development?

Everyone will be able to categorise data however they want. Any discipline, any culture, any theory will be able to categorise data in its own way, to allow diversity, using a single metalanguage, to ensure interoperability. This will automatically generate ecosystems of ideas that will be navigable with all their semantic relations. You will be able to compare different ecosystems of ideas according to their data and the different ways of categorising them. You will be able to choose different perspectives and approaches: for example, the same people interpreting different sets of data, or different people interpreting the same set of data. IEML ensures the interoperability of all ecosystems of ideas. On the one hand you have the greatest possibility of diversity, and on the other you have computability and semantic interoperability. I think that it will be a big improvement for the human sciences because today the human sciences can use statistics, but it is a purely quantitative method. They can also use automatic reasoning, but it is a purely logical method. But with IEML we can compute using semantic relations, and it is only through semantics (in conjunction with logic and statistics) that we can understand what is happening in the human realm. We will be able to analyse and manipulate meaning, and there lies the essence of the human sciences.

Let’s talk about the current stage of development of IEML: I know it’s early days, but can you outline some of the applications or tools that may be developed with this metalanguage?

It is still too early; perhaps the first application may be a kind of collective intelligence game in which people will work together to build the best ecosystem of ideas for their own goals.

I published The Semantic Sphere in 2011. And I finished the grammar that has all the mathematical and algorithmic dimensions six months ago. I am writing a second book entitled Algorithmic Intelligence, where I explain all these things about reflexivity and intelligence. The IEML dictionary will be published (online) in the coming months. It will be the first kernel, because the dictionary has to be augmented progressively, and not just by me. I hope other people will contribute.

This IEML interlinguistic dictionary ensures that semantic networks can be translated from one natural language to another. Could you explain how it works, and how it incorporates the complexity and pragmatics of natural languages?

The basis of IEML is a simple commutative algebra (a regular language) that makes it computable. A special coding of the algebra (called Script) allows for recursivity, self-referential processes and the programming of rhizomatic graphs. The algorithmic grammar transforms the code into fractally complex networks that represent the semantic structure of texts. The dictionary, made up of terms organized according to symmetric systems of relations (paradigms), gives content to the rhizomatic graphs and creates a kind of common coordinate system of ideas. Working together, the Script, the algorithmic grammar and the dictionary create a symmetric correspondence between individual algebraic operations and different semantic networks (expressed in natural languages). The semantic sphere brings together all possible texts in the language, translated into natural languages, including the semantic relations between all the texts. On the playing field of the semantic sphere, dialogue, intersubjectivity and pragmatic complexity arise, and open games allow free regulation of the categorisation and the evaluation of data. Ultimately, all kinds of ecosystems of ideas – representing collective cognitive processes – will be cultivated in an interoperable environment.

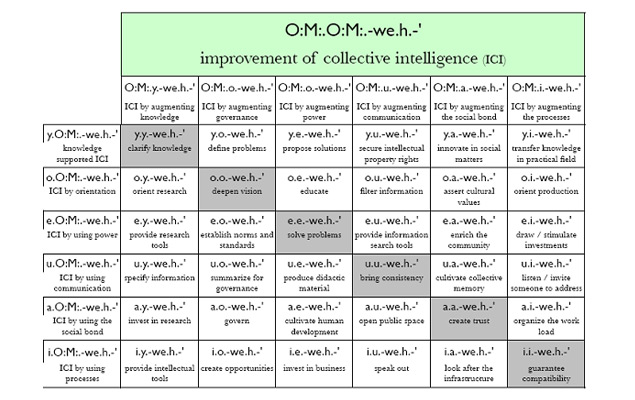

Schema from the START – IEML / English Dictionary by Prof. Pierre Lévy, FRSC, CRC, University of Ottawa, 25th August 2010 (Copyright Pierre Lévy 2010, license Apache 2.0).

Since IEML automatically creates very complex graphs of semantic relations, one of the development tasks that is still pending is to transform these complex graphs into visualisations that make them usable and navigable.

How do you envisage these big graphs? Can you give us an idea of what the visualisation could look like?

The idea is to project these very complex graphs onto a 3D interactive structure. These could be spheres, for example, so you will be able to go inside the sphere corresponding to one particular idea and you will have all the other ideas of its ecosystem around you, arranged according to the different semantic relations. You will also be able to manipulate the spheres from the outside and look at them as if they were on a geographical map. And you will be able to zoom in and zoom out of fractal levels of complexity. Ecosystems of ideas will be displayed as interactive holograms in virtual reality on the Web (through tablets) and as augmented reality experienced in the 3D physical world (through Google Glass, for example).

I’m also curious about your thoughts on the social alarm generated by the Internet’s enormous capacity to retrieve data, and the potential exploitation of this data. There are social concerns about possible abuses and privacy infringement. Some big companies are starting to consider drafting codes of ethics to regulate and prevent the abuse of data. Do you think a fixed set of rules can effectively regulate the changing environment of the algorithmic medium? How can IEML contribute to improving the transparency and regulation of this medium?

IEML does not only allow transparency, it allows symmetrical transparency. Everybody participating in the semantic sphere will be transparent to others, but all the others will also be transparent to him or her. The problem with hyper-surveillance is that transparency is currently not symmetrical. What I mean is that ordinary people are transparent to big governments and big companies, but these big companies and big governments are not transparent to ordinary people. There is no symmetry. Power differences between big governments and little governments or between big companies and individuals will probably continue to exist. But we can create a new public space where this asymmetry is suspended, and where powerful players are treated exactly like ordinary players.

And to finish up, last month the CCCB Lab began a series of workshops related to the Internet Universe project, which explores the issue of education in the digital environment. As you have published numerous works on this subject, could you summarise a few key points in regard to educating ‘digital natives’ about responsibility and participation in the algorithmic medium?

People have to accept their personal and collective responsibility. Because every time we create a link, every time we “like” something, every time we create a hashtag, every time we buy a book on Amazon, and so on, we transform the relational structure of the common memory. So we have a great deal of responsibility for what happens online. Whatever is happening is the result of what all the people are doing together; the Internet is an expression of human collective intelligence.

Therefore, we also have to develop critical thinking. Everything that you find on the Internet is the expression of particular points of view that are neither neutral nor objective, but an expression of active subjectivities. Where does the money come from? Where do the ideas come from? What is the author’s pragmatic context? And so on. The more we know the answers to these questions, the greater the transparency of the source… and the more it can be trusted. This notion of making the source of information transparent is very close to the scientific mindset, because scientific knowledge has to be able to answer questions such as: Where did the data come from? Where does the theory come from? Where do the grants come from? Transparency is the new objectivity.

Marta Maria Gonçalves de Oliveira | 22 June 2016

This research on the dictionary is fantastic. I am relating it to an app for a multisensory strategy, Son e Gestos, aimed at students with cognitive deficits, visual impairments or dyslexia, so that they can learn to read and write through the app. So how can this metalanguage be recorded in this strategy in order to contribute to the research? We are still building the app. And knowing that the symbology contributes to a collective intelligence is very motivating.
