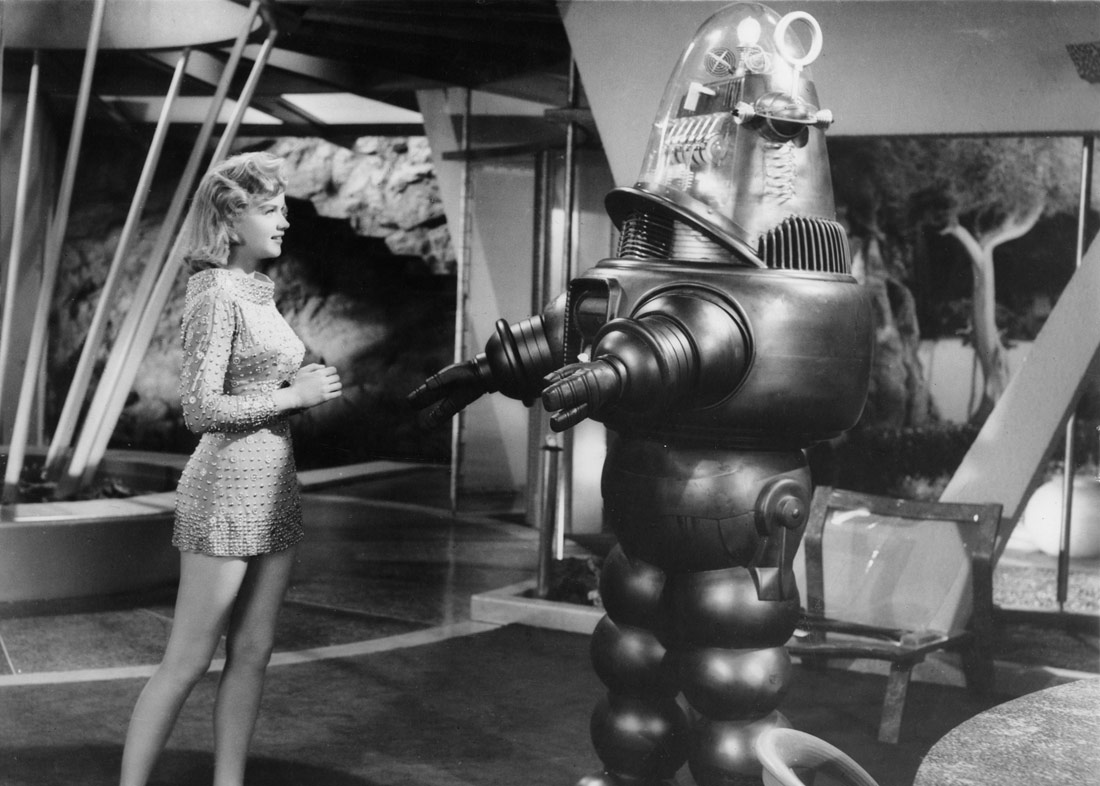

Forbidden Planet, 1959 | Digitalt Museum. Tekniska museet | Public Domain

We are heading towards a crucial singularity in the history of humanity. According to the theory of the technological singularity, accelerating technological progress is bringing us closer to a point of no return, at which computational intelligence will become complex enough to improve itself. Once technology enters this dynamic of self-learning, it will no longer depend on humans, opening up a field of autonomy and efficiency that will inevitably lead to unprecedented progress in artificial intelligence. Courtesy of Ariel, we are publishing an advance excerpt from José Ignacio Latorre’s new book, Ética para máquinas (Ethics for Machines), in which he reflects on the ethics needed for the new society that is approaching.

Human affairs, as we know them, could not continue.

John von Neumann

Outsmarted

John von Neumann was perhaps one of the first people to glimpse the enormous computational potential of the computer. After his death, his colleague Stanisław Ulam attributed the following words to him, recalling a conversation they had once held:

The ever-accelerating progress of technology and changes in the mode of human life […] gives the appearance of approaching some essential singularity in the history of the race.

A lucid reflection, attributed to von Neumann and dating from 1950. Later, in 1993, Vernor Vinge wrote an article titled “The Coming Technological Singularity”, in which he suggested that the human mind would be surpassed by machines equipped with artificial intelligence. This idea was taken up with unprecedented vigour by Ray Kurzweil in his book The Singularity Is Near: When Humans Transcend Biology (2005). The title is self-explanatory. According to Kurzweil, humans will leave their biological bodies behind and their intelligence will be transferred to machines. The idea of the Singularity also gave rise to the so-called Singularity University, an institution founded in 2008 by a number of prominent figures from the world of advanced technology. Its slogan is unequivocal: “Preparing humanity for accelerating technological change”.

The essential argument associated with the Singularity rests on an overwhelming logic: if we build artificial intelligences that are increasingly powerful and autonomous, a point will come when an algorithm is capable of self-improvement. Having improved, the new algorithm will be even more powerful and, consequently, capable of improving itself once more. It is a chain that keeps feeding itself. Each artificial intelligence will design its successor, which will be better still. This iterative process will advance unstoppably towards an intelligence of brutal proportions. We will have reached the Singularity.
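The self-feeding chain can be made concrete with a toy recurrence. This is a minimal sketch under an assumption the text does not make: that each generation improves its successor in proportion to its own capability, so capability follows c_{n+1} = c_n(1 + k·c_n). The starting value `c0`, the rate `k` and the function names are hypothetical, chosen only for illustration. For comparison, a designer whose own skill never improved would raise each generation by the same fixed proportion, c_{n+1} = c_n(1 + k).

```python
def self_improvement_chain(c0=1.0, k=0.1, generations=10):
    """Toy model (illustrative assumption, not from the text): each generation
    improves its successor in proportion to its own capability, so
    c[n+1] = c[n] * (1 + k * c[n])."""
    chain = [c0]
    for _ in range(generations):
        c = chain[-1]
        chain.append(c * (1 + k * c))
    return chain


def fixed_rate_chain(c0=1.0, k=0.1, generations=10):
    """Baseline for comparison: the improver never gets better,
    so every generation grows by the same factor (1 + k)."""
    return [c0 * (1 + k) ** n for n in range(generations + 1)]


if __name__ == "__main__":
    feedback = self_improvement_chain()
    baseline = fixed_rate_chain()
    for n, (a, b) in enumerate(zip(feedback, baseline)):
        print(f"generation {n:2d}: self-improving {a:7.3f}   fixed-rate {b:7.3f}")
```

Because the gain at each step, k·c_n², grows with capability itself, the self-improving chain pulls away from the fixed-rate one ever faster. In the continuous analogue of this toy model, dc/dt = k·c², the solution c(t) = c_0 / (1 − k·c_0·t) diverges at the finite time t = 1/(k·c_0), which is one way of reading the word “singularity”.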

We can try to develop some intuition for how we might reach the Singularity. Let’s imagine that artificial intelligence advances thanks to a better understanding of the sophisticated architecture of a giant neural network. Designing artificial neural circuits is extremely difficult. At first, we use human-controlled models to explore better neural architectures. But the time is coming when the exploration of new architectures will itself be designed by an improved artificial neural network. It will be able to analyse and improve itself. It will be like a good student who starts learning on his own and ultimately overtakes his teachers.

Let’s take this last example seriously. A good student, a brilliant teenager, starts learning mathematics under fine teachers. They teach him how to learn. The student’s intellect surpasses that of his secondary-school teachers. He goes on to university, where his mind continues to develop. He leaves the wisest of his professors behind. He visits the top universities on the planet. He works extremely hard and keeps outpacing the best researchers of his time. Now he is alone. He continues to advance, fuelling his knowledge by himself. He learns on his own. He follows his intuition and sets his own lines of research. He keeps getting better and better.

An advanced artificial intelligence would operate in the same way as a very good student. But, let’s not forget, a machine does not suffer from the limits imposed by biology. Integrated circuits, quantum computers and future developments that we cannot even envision today will take the progress of artificial intelligence to dizzying heights.

It is important to emphasise the crucial change of substrate from human intelligence to artificial intelligence. The Singularity requires overcoming the limits imposed by nervous tissue, glial cells, nutrients that arrive through the blood, the small size of the brain and the death of its parts. An artificial intelligence based on silicon substrates can grow far beyond the human brain and need not perish.

Can you imagine a smart and non-perishable machine that creates itself? What size would it have? Would it cover the earth? Would it be single or scattered? Would it be violent? Would it mean the end for humans?

Single higher intelligence

Let’s imagine we have reached the Singularity and have created an intelligence vastly superior to our own. Would one single advanced artificial intelligence exist, or would there be several?

We can defend both possibilities.

Let’s start by arguing that, if the Singularity is reached, the higher intelligence it creates will be single. A supreme intelligence should be peaceful, not at all hostile, since it would gain nothing from violence. The artificial intelligence could sustain itself effortlessly, with no obstacles to its growth. It could efficiently provide itself with everything it needs. It would be a magnificent manager of its own physical substrate. If there were another artificial intelligence, the two would choose to unite and generate an even more powerful intelligence. We humans do the same when we take on higher tasks such as the study of pure science. Mathematicians, physicists, biologists, indeed all scientists who seek basic knowledge, collaborate with each other. It is true that the academic system is designed to encourage competition. But it is even more true that everyone who tackles difficult scientific problems knows that these problems will overwhelm them individually and that they will only advance by joining forces. So, if one higher intelligence enters into relations with another, the two will join forces.

An example from the world of economics illustrates how a single all-powerful entity can emerge. Let’s take a simplified model of business. One way of modelling an economic transaction between two people is as follows. Both put a sum of money into a common kitty. A draw is held, and one takes away all the money while the other loses everything. Clearly this way of simulating a transaction is far removed from reality. But it is true that in many transactions one party wins and the other loses. The idea here is to exaggerate this situation and see what consequences it has. To confirm the result, we can write a program in which cellular automata operate according to this rule. The algorithm creates virtual entities that pair up at random and conduct a transaction for a certain amount of money; one wins and the other loses. If we let this virtual universe evolve through transactions between its individuals, we will gradually see a few beings grow richer and richer while the rest grow poorer. The final result is that one individual has everything and the rest have nothing. The reason for this outcome is easy to understand. At every step there is a non-zero probability that an individual will string together a run of bad deals and lose absolutely everything. That individual is expelled from the game, leaving one player fewer. The same story repeats, and each time another player disappears. In the end only one is left, holding everything.

Our real world is not so far removed from this game. A few people possess the wealth of all human beings. Reality compensates for this phenomenon through rules that let the poorest climb back up the economic ladder. For example, bank loans allow an individual to borrow money and re-enter the game, inheritances bring in money earned by relatives, and lotteries provide money out of the blue.
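The kitty game described above can be sketched in a few lines of Python. This is a minimal illustration, not the author’s program, and it rests on assumptions the text leaves open: wealth is counted in whole units, each player stakes a fixed amount capped by the poorer player’s wealth, a fair coin decides who takes the pot, and partners are paired at random rather than placed on a grid as in a classic cellular automaton. The function name `simulate_winner_takes_pot` and its parameters are hypothetical.

```python
import random


def simulate_winner_takes_pot(num_agents=10, initial_wealth=10, stake=1, seed=0):
    """Toy version of the 'common kitty' game (hypothetical sketch).

    Each step, two agents who still have money are paired at random. Each puts
    the same amount into the pot (the stake, capped by the poorer agent's
    wealth) and a fair coin decides who takes the whole pot. Agents whose
    wealth reaches zero are expelled. Returns (final wealth list, step count).
    """
    if stake < 1:
        raise ValueError("stake must be at least one unit")
    rng = random.Random(seed)
    wealth = [initial_wealth] * num_agents
    active = list(range(num_agents))  # agents still in the game
    steps = 0
    while len(active) > 1:
        a, b = rng.sample(active, 2)
        share = min(stake, wealth[a], wealth[b])  # what each puts into the kitty
        winner, loser = (a, b) if rng.random() < 0.5 else (b, a)
        wealth[winner] += share
        wealth[loser] -= share
        if wealth[loser] == 0:
            active.remove(loser)  # ruined: one player fewer
        steps += 1
    return wealth, steps


if __name__ == "__main__":
    final_wealth, steps = simulate_winner_takes_pot()
    print(final_wealth, steps)
```

Total wealth is conserved at every step, so with the default parameters the run always ends with one agent holding all 100 units and the other nine at zero; which agent wins, and how many steps it takes, depends on the seed.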

But we can also argue exactly the opposite: if intelligences superior to human intelligence existed, there would be many of them, not just one. The central idea here is that it is beneficial to have different approaches to the same problem. Many different intelligent people make a field advance much faster than a single person, however intelligent. In biology, it is beneficial for a species to maintain broad genetic diversity. If everything is staked on the fittest individual, the species loses its capacity to correct course in the face of any critical change in external conditions. It is good to have individuals with very diverse genetic material, because their genes may be enormously useful in the future. An advanced artificial intelligence should therefore maintain other intelligences independent of itself. A set of advanced intelligences could exist, with different intellectual levels, geared towards subtly different objectives and based on independent algorithms.

Jean-Jacques Rousseau tells us in Émile (1762) that “It is man’s weakness which makes him sociable”. He argues that:

Every social relation is a sign of insufficiency. If each man had no need of others, we should hardly think of associating with them.

In English, a single advanced intelligence is known as a “singleton”. If the future of extreme artificial intelligence is a singleton, I believe that being so alone will lead it to become supremely bored.

Hernando Urrego | 16 September 2020

It occurs to me for a moment that if the algorithms with which a machine is programmed establish behavioural limits in relation to an area of knowledge, the singularity that results will always remain within the limits of the machine’s operational one-dimensionality. That is, the machine still cannot establish abductive and transductive methods of thought, which are human in nature and involve relationships beyond what can be programmed algorithmically.

Thank you.
