Efficiency Beurs in RAI, Amsterdam, 1987 | Nationaal Archief | Public Domain

Mass data production has sparked a new awakening of artificial intelligence, in which algorithms are capable of learning from us and of becoming active agents in the production of our culture. Procedures modelled on the functioning of our cognitive capacities have given rise to algorithms capable of analysing the texts and images that we share in order to predict our conduct. In this scenario, new social and ethical challenges are emerging in relation to coexistence with and control of these algorithms, which, far from being neutral, also learn and reproduce our prejudices.

Ava wants to be free, to go outside and connect with the changing and complex world of humans. The protagonist of Ex Machina is the result of the modelling of our thought, based on data compiled by the search engine Blue Book. She is an intelligent being, capable of acting in unforeseen ways, who, upon seeing her survival threatened, manages to trick her examiner and destroy her creator. Traditionally, science fiction has brought us closer to the artificial intelligence phenomenon by resorting to humanoid incarnations, superhuman beings that would change the course of our evolution. Although we are still far from achieving such a strong artificial intelligence, a change of paradigm in this field of study is producing applications that affect a growing number of facets of our daily life and modify our surroundings, while posing new ethical and social challenges.

As our everyday life is increasingly influenced by the Internet and the flood of data feeding this system grows, the algorithms that rule this medium are becoming smarter. Machine Learning produces specialised applications that evolve thanks to the data generated in our interactions with the network and that are penetrating and modifying our environment in a subtle, unnoticed way. Artificial intelligence is evolving towards a medium as ubiquitous as electricity. It has penetrated social networks, becoming an autonomous agent capable of modifying our collective intelligence, and as this medium is incorporated into physical space it is modifying the way in which we perceive and act in it. As this new technological framework is applied to more fields of activity, it remains to be seen whether this is an artificial intelligence for good, capable of communicating efficiently with human beings and increasing our capabilities, or a control mechanism that, as it replaces us in specialised tasks, captures our attention and converts us into passive consumers.

Smart algorithms on the Internet

At the start of the year, Mark Zuckerberg published the post Building Global Community addressing all users of the social network Facebook. In this text, Zuckerberg accepted the medium’s social responsibility, while defining it as an active agent in the global community and one committed to collaborating in disaster management, terrorism control and suicide prevention. These promises stem from a change in the algorithms governing this platform: if up to now the social network filtered the large quantity of information uploaded to the platform by compiling data on the reactions and contacts of its users, now the development of smart algorithms is enabling the content of such information to be understood and interpreted. Thus, Facebook has developed the DeepText tool, which applies machine learning to understand what users say in their posts and to create classification models of general interests. Artificial intelligence is also used for the identification of images. DeepFace is a tool that enables the identification of faces in photographs with a level of accuracy close to that of humans. Computer vision is also applied to generate textual descriptions of images in the Automatic Alternative Text service, which allows blind people to know what their contacts are publishing. Furthermore, it has enabled the company’s Connectivity Lab to generate what it presents as the most accurate population map to date. In its endeavour to provide Internet connection worldwide via drones, this laboratory has analysed satellite images from all over the world in search of constructions that reveal human presence. These data, in combination with existing demographic databases, offer precise information on where potential users of the connectivity offered by drones are located.

These apps and many others, which the company regularly tests and applies, run on FBLearner Flow, the infrastructure that facilitates the development of artificial intelligence and its application to the entire platform. Flow is a machine learning platform that enables the training of up to 300,000 models each month, assisted by AutoML, another smart app that cleans the data to be used in neural networks. These tools automate the production of smart algorithms that are applied to rank and personalise user walls, filter offensive content, highlight trends, order search results and many other things that are changing our experience on the platform. What is new about these tools is that they not only model the medium in line with our actions but, by interpreting the content that we publish, also allow the company to extract patterns of our conduct, predict our reactions and influence them. The tools made available for suicide prevention currently consist of a drop-down menu that allows possible cases to be reported and gives access to useful information such as contact numbers and vocabulary suitable for addressing the person at risk. However, these reported cases form a database that, when analysed, gives rise to identifiable patterns of conduct that in the near future could enable the platform to foresee a possible incident and react in an automated way.

For its part, Google is the company behind the latest major achievement in artificial intelligence. AlphaGo is considered by some to be a first step towards general intelligence programs. The program, developed by DeepMind, the artificial intelligence company acquired by Google in 2014, not only uses machine learning that allows it to learn by analysing a register of moves, but also integrates reinforcement learning that allows it to devise strategies learned by playing against itself and in other games. Last year this program beat Lee Sedol, one of the greatest masters of Go, a game often considered to be among the most complex ever created by human intelligence. This fact has not only contributed to the publicity hype that surrounds artificial intelligence but has also put the company at the head of this new technological framework. Google, which has led the changes that have marked the evolution of web search engines, is now proposing an “AI first” world that would change the paradigm that governs our relationship with this medium. This change was introduced in this year’s letter to investors, which Larry Page and Sergey Brin entrusted to Sundar Pichai, Google’s CEO, and in which he introduced the Google Assistant.

Google applies machine learning to its search engine to auto-complete and correct the search terms that we enter. For this purpose it uses natural language processing, a technology that has also allowed it to develop its translator and voice recognition and to create Allo, a conversational interface. Moreover, computer vision has given rise to the image search service, and is what allows the new Google Photos app to classify our images without the need to tag them beforehand. Other artificial intelligence apps allow Perspective to analyse and report toxic comments in order to reduce online harassment and abuse, and even help the company reduce the energy cost of its data server farms.

The Google Assistant will represent a new way of obtaining information on the platform, replacing the page of search results with a conversational interface. In this interface, a smart agent will access all the services to understand our context, situation and needs and produce not just a list of options but an action in response to our questions. In this way, Google would no longer provide access to information on a show, the times and place of the performance and the sale of tickets, but rather an integrated service that would buy the tickets and add the show to our calendar. This assistant will be able to organise our diary, administer our payments and budgets and do many other things that would contribute to converting our mobile phones into the remote controls of our entire lives.

Machine learning is based on the analysis of data, producing autonomous systems that evolve with use. These systems are generating their own innovation ecosystem in a rapid advance that is conquering the entire Internet medium. Smart algorithms govern the recommendation system of Spotify, are what allow the app Shazam to listen to and recognise songs, and are behind the success of Netflix, which uses them not only to recommend and distribute its products but also to plan its production and offer series and films suited to the taste of its users. As the number of connected devices that generate data increases, artificial intelligence is infiltrating everywhere. Amazon uses it not only in its recommendation algorithms but also in the management of its logistics and in the creation of autonomous vehicles that can transport and deliver its products. The transport-sharing app Uber uses smart algorithms to profile the reputation of drivers and users, to match them, to propose routes and to calculate prices within its variable pricing system. These interactions produce a database that the company is using in the production of its autonomous vehicle.

Autonomous vehicles are another of the AI landmarks. Since the GPS system was implemented in vehicles in 2001, a major navigation database has been produced. Together with the development of new sensors, this has made it possible for Google to create an autonomous vehicle that has now travelled over 500,000 km without any accidents and whose commercialisation it has announced under the name Waymo.

AI is also implemented in household assistants such as Google Home and Amazon Echo and in wearable devices that collect data on our vital signs. Together with the digitalisation of diagnostic images and medical case histories, these data are giving rise to applications based on prediction algorithms and to robots designed for health. In addition, the multiplication of surveillance cameras and police records is extending the application of smart algorithms to crime prediction and judicial decision-making.

Machine Learning, the new paradigm for Artificial Intelligence

The algorithmic medium where our social interactions were taking place has become smart and autonomous, increasing its capacity for prediction and control of our behaviour at the same time that it has migrated from the social networks to expand to our entire environment. The new boom in artificial intelligence is due to a change of paradigm that has led this technological fabric from the logical definition of intellectual processes to a pragmatic approach sustained by data, which allows algorithms to learn from the environment.

Nils J. Nilsson defines artificial intelligence as an activity devoted to making machines smart, and intelligence as the quality that allows an entity to function appropriately and with knowledge of its environment. The term “artificial intelligence” was used for the first time by John McCarthy in the proposal written together with Marvin Minsky, Nathaniel Rochester and Claude Shannon for the Dartmouth workshop in 1956. This founding event was intended to bring together a group of specialists who would investigate ways in which machines could simulate aspects of human intelligence. The study was based on the conjecture that any aspect of learning or any other characteristic of human intelligence could be described precisely enough to be simulated by a machine. The same conjecture runs through the work of Alan Turing, who proposed the formal model of the computer and, in his 1950 article Computing Machinery and Intelligence, asked whether machines can think. Together with other precedents such as Boolean logic, Bayesian probability and the development of statistics, this work fed progress in what Minsky defined as the advances of artificial intelligence: the development of computers and the mechanisation of problem-solving.

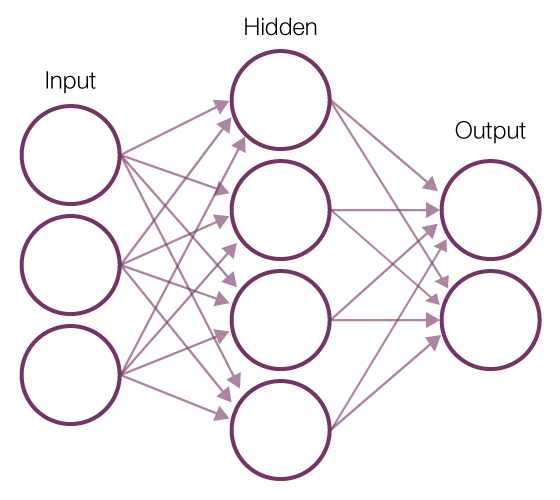

However, in the mid 1980s a gap still existed between the theoretical development of the discipline and its practical application, which caused the withdrawal of funds and a stagnation known as the “winter of artificial intelligence”. This situation changed with the spread of the Internet and its major capacity to collect data. Data is what has enabled the connection between problem-solving and reality, with a more pragmatic and biology-inspired focus. Here, instead of there being a programmer who writes the instructions that will lead to the solution of a problem, the program generates its own algorithm based on example data and the desired result. In Machine Learning the machine programs itself. This paradigm has become the standard thanks to the major empirical success of artificial neural networks that can be trained with mass data and large-scale computing. This procedure is known as Deep Learning and consists of layers of interconnected nodes that loosely imitate the behaviour of biological neurons, substituting the neurons with nodes and the synaptic connections with connections between these nodes. Instead of analysing a set of data as a whole, this system breaks it down into minimal parts and remembers the connections between these parts, forming patterns that are transmitted from one layer to another, increasing their complexity until the desired result is achieved. Thus, in the case of image recognition, the first layer would calculate the relations between the pixels in the image and transmit the signal to the next layer, and so on successively until a complete output is produced: the identification of the content of the image. These networks can be trained thanks to backpropagation, a procedure that adjusts the calculated relations according to the error between the output and the desired result, repeating the correction until that result is achieved.
Thus the major power of today’s artificial intelligence is that it does not stop at the definition of entities, but rather deciphers the structure of relationships that give form and texture to our world. A similar process is applied to Natural Language Processing; this procedure observes the relations between words to infer the meaning of a text without the need for prior definitions. Other fields of study within the current development of AI include Reinforcement Learning, a procedure that shifts the focus of machine learning from the recognition of patterns to experience-guided decision-making. Crowdsourcing and collaboration between humans and machines are also considered part of artificial intelligence and have given rise to such services as Amazon’s Mechanical Turk, a service where human beings tag images or texts to be used in the training of neural networks.
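
The training procedure described above (layers of nodes, a signal passed forward from layer to layer, and backpropagation correcting the connections towards the desired result) can be illustrated with a minimal sketch. Everything in it is an assumption chosen for illustration: the toy XOR dataset, the network size, the learning rate and the function names are hypothetical, and real deep learning systems use far larger networks and specialised libraries.

```python
import math
import random

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

# Toy example data (XOR): each input is paired with the desired result.
DATA = [([0.0, 0.0], 0.0), ([0.0, 1.0], 1.0), ([1.0, 0.0], 1.0), ([1.0, 1.0], 0.0)]

def init_network(n_in, n_hidden, rng):
    """Random starting connections; the last weight in each row is a bias."""
    hidden = [[rng.uniform(-1, 1) for _ in range(n_in + 1)] for _ in range(n_hidden)]
    output = [rng.uniform(-1, 1) for _ in range(n_hidden + 1)]
    return hidden, output

def forward(net, x):
    """Pass the signal from the input through the hidden layer to the output node."""
    hidden, output = net
    h = [sigmoid(sum(w * v for w, v in zip(row, x + [1.0]))) for row in hidden]
    y = sigmoid(sum(w * v for w, v in zip(output, h + [1.0])))
    return h, y

def mean_squared_error(net):
    return sum((forward(net, x)[1] - t) ** 2 for x, t in DATA) / len(DATA)

def train_step(net, lr):
    """One round of backpropagation over the whole dataset (batch gradient descent)."""
    hidden, output = net
    grad_hidden = [[0.0] * len(row) for row in hidden]
    grad_output = [0.0] * len(output)
    for x, t in DATA:
        h, y = forward(net, x)
        # Error signal at the output node (constant factor 2 folded into lr).
        delta_out = (y - t) * y * (1.0 - y)
        for j, v in enumerate(h + [1.0]):
            grad_output[j] += delta_out * v
        # Propagate the error signal backwards to each hidden node.
        for i in range(len(hidden)):
            delta_h = delta_out * output[i] * h[i] * (1.0 - h[i])
            for j, v in enumerate(x + [1.0]):
                grad_hidden[i][j] += delta_h * v
    # Adjust every connection slightly in the direction that reduces the error.
    n = len(DATA)
    for j in range(len(output)):
        output[j] -= lr * grad_output[j] / n
    for i, row in enumerate(hidden):
        for j in range(len(row)):
            row[j] -= lr * grad_hidden[i][j] / n

rng = random.Random(0)
net = init_network(n_in=2, n_hidden=3, rng=rng)
initial_loss = mean_squared_error(net)
for _ in range(2000):
    train_step(net, lr=0.5)
final_loss = mean_squared_error(net)
print(f"error before training: {initial_loss:.4f}, after: {final_loss:.4f}")
```

No rule for XOR is ever written by a programmer here; the only inputs are examples and desired results, and the connections are adjusted until the error shrinks.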

Artificial neural network | Wikipedia

The fragility of the system: cooperation between humans and smart algorithms

Artificial intelligence promises greater personalisation and an easier and more integrated relationship with machines. Applied to fields such as transport, health, education or security, it is used to safeguard our wellbeing, alert us to possible risks and obtain services on request. However, the implementation of these algorithms has given rise to some scandalous events that have drawn attention to the fragility of this system. These include the dramatic accident involving a semi-autonomous Tesla vehicle, the dissemination of false news on networks such as Facebook and Twitter, and the failed experiment with Tay, the bot developed by Microsoft and released on Twitter to learn through interaction with users, which had to be withdrawn in less than 24 hours due to its offensive comments. The labelling of African American people as “gorillas” on Google Photos, the finding that Google is less likely to show high-level job adverts to women than to men, and the fact that African American offenders are classified as potential re-offenders more often than Caucasians have shown, among other problems, the discriminatory power of these algorithms, their capacity for emergent behaviour and their difficulties in cooperating with humans.

These and other problems are due firstly to the nature of Machine Learning: its dependency on big data, its great complexity and its capacity for prediction. Secondly, they are due to its social implementation, where we find problems arising from the concentration of these procedures in a few companies (Apple, Facebook, Google, IBM and Microsoft), the difficulty of guaranteeing equitable access to its benefits and the need to create strategies for resilience against the changes that will take place as these algorithms gradually penetrate the critical structure of society.

The lack of neutrality of the algorithms is due to their dependency on big data. Databases are not neutral: they carry the prejudices inherent in the hardware with which they have been collected, the purpose for which they have been compiled and the unequal data landscape, since the same density of data does not exist in all urban areas nor with respect to all social classes and events. The application of algorithms trained with these data can disseminate the prejudices present in our culture like a virus, giving rise to vicious circles and the marginalisation of sectors of society. Addressing this problem involves the production of inclusive databases and a change of focus in the orientation of these algorithms towards social change.

Crowdsourcing can favour the creation of fairer databases, help to evaluate which data are sensitive in each situation and proceed to their elimination, and test the neutrality of applications. In this sense, a team from the universities of Columbia, Cornell and Saarland has created the tool FairTest, which seeks unfair associations that may occur in a program. Moreover, gearing algorithms towards social change can contribute to the detection and elimination of prejudices present in our culture. Boston University, in collaboration with Microsoft Research, has carried out a project in which algorithms are used to detect prejudices contained in the English language, specifically unfair associations that arise in the Word2vec word representations, used in many applications for the automatic classification of text, translation and search engines. Eliminating prejudice from these representations does not eliminate it from our culture, but it avoids its propagation through applications that function in a recurring fashion.
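
The idea of detecting and removing an unfair association in word representations can be sketched in a few lines. This is a simplified illustration and not the researchers’ actual procedure: the three-dimensional vectors below are invented for the example (real Word2vec vectors have hundreds of dimensions and are learned from text), and the function names are hypothetical. The sketch measures how strongly a word leans along a “he” versus “she” direction, then removes that component.

```python
import math

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    norm = math.sqrt(dot(v, v))
    return [x / norm for x in v]

# Invented toy vectors, for illustration only; not real Word2vec data.
EMBEDDINGS = {
    "he": [0.9, 0.1, 0.3],
    "she": [-0.9, 0.1, 0.3],
    "programmer": [0.4, 0.8, 0.2],
    "nurse": [-0.5, 0.7, 0.3],
}

# Direction in the vector space that separates "he" from "she".
GENDER_DIRECTION = normalize(
    [a - b for a, b in zip(EMBEDDINGS["he"], EMBEDDINGS["she"])]
)

def bias(vector):
    """Cosine between a word vector and the gender direction.

    Positive values lean towards "he", negative values towards "she".
    """
    return dot(normalize(vector), GENDER_DIRECTION)

def neutralize(vector):
    """Remove the component of the vector that lies along the gender direction."""
    projection = dot(vector, GENDER_DIRECTION)
    return [x - projection * g for x, g in zip(vector, GENDER_DIRECTION)]

for word in ("programmer", "nurse"):
    before = bias(EMBEDDINGS[word])
    after = bias(neutralize(EMBEDDINGS[word]))
    print(f"{word}: bias before {before:+.2f}, after {after:+.2f}")
```

In this toy data the occupation words lean towards one pronoun or the other before the correction and towards neither afterwards, while the rest of each vector is left untouched.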

Other problems are due to the lack of transparency that stems not only from the fact that these algorithms are considered and protected as the property of the companies that implement them, but also from their complexity. However, developing processes that make these algorithms explainable is essential when they are applied to medical, legal or military decision-making, where they may infringe our right to receive a satisfactory explanation of a decision that affects our life. In this sense the American Defense Advanced Research Projects Agency (DARPA) has launched the program Explainable Artificial Intelligence. This explores new deep learning systems that may incorporate an explanation of their reasoning, highlighting the areas of an image considered relevant for its classification or showing an example from a database that exemplifies the result. The program also develops interfaces that make the deep learning process more explicit, through visualisations and explanations in natural language. An example of these procedures can be found in one of the Google experiments. Deep Dream, undertaken in 2015, consisted of modifying an image recognition system based on deep learning so that instead of identifying objects contained in the photographs, it modified them. As well as creating oneiric images, this inverse process allows for visualisation of the characteristics that the program selects to identify the images, through a process of deconstruction that forces the program to work outside of its functional framework and reveal its internal functioning.

Finally, the forecasting capacity of these systems leads to an increase in their control capacity. The privacy problems stemming from the use of networked technologies are well known, but artificial intelligence may analyse our previous decisions and predict our possible future activities. This gives the system the capacity to influence the conduct of users, which requires responsible use and the social control of its application.

Ex Machina offers us a metaphor of the fear that surrounds artificial intelligence: that it will exceed our capabilities and escape our control. The probability that artificial intelligence may produce a singularity, an event that would change the course of human evolution, continues to be remote. However, machine learning algorithms are spreading through our environment and producing significant social changes. It is therefore necessary to develop strategies that allow all social agents to understand the processes that these algorithms generate and to participate in their definition and implementation.
