Security screening at the Clinton Engineer Works. Lie detector test. Oak Ridge, USA, c. 1945 | Wikimedia Commons | Public domain

The US elections showcased post-truth politics. The impact of false news on the results not only demonstrates the social influence of the Internet but also highlights the misinformation that circulates there. The rise of this phenomenon is also closely linked to the role of social networks as a point of access to the Internet. Faced with this situation, the solutions lie in diversifying the control of information, artificial intelligence, and digital literacy.

If you’re in the States, it’s difficult to escape the huge media phenomenon of the electoral process, which floods the traditional media and overflows into the social networks. In mid-2016, Bernie Sanders was still the favourite in progressive circles, but Donald Trump had already become a media sensation. On 8 November 2016, Trump emerged from the hullabaloo created by satirical memes, hoaxes, clickbait and false news to be elected president of the United States. He was inaugurated on 20 January 2017 amid controversy arising from misinformation about attendance at the event and mass protests led by the Women’s March.

Meanwhile, on 10 November, Mark Zuckerberg, speaking at the Techonomy Conference in Half Moon Bay, tried to absolve his social network, Facebook, of any part in spreading fake news and of any possible influence on the election results. The platform’s entry into the media arena, embodied in its Trending Topics (available only in English-speaking countries) and reinforced by the fact that a growing number of citizens turn to the Internet for their news, has put it at the centre of a controversy that questions the supposed neutrality of digital platforms. Their self-description as technology media whose content is generated by users and curated by neutral algorithms has been overshadowed by evidence of a lack of transparency in how these algorithms work, partisan human involvement in censorship, and the injection of content into trending news and users’ walls. This led Zuckerberg to redefine his platform as a space for public discourse and to accept its responsibility as an agent in that discourse by implementing new measures. These include the adoption of a content publication policy based on non-partisanship, trustworthiness, transparency of sources and a commitment to corrections; the development of tools to report fake or misleading content; and the use of external fact-checking services such as Snopes, FactCheck.org, PolitiFact, ABC News and AP, all signatories of the code of principles of Poynter’s International Fact-Checking Network. At the same time, other technology giants like Google and Twitter have developed policies to eliminate fake news and combat misuse of the Internet (Google removed some 1.7 billion ads that violated its policies in 2016, more than twice as many as the previous year).

Fake news, fabricated for ulterior gain and circulating on the Internet in the form of spam, jokes and bait posts, is now at the centre of the controversy surrounding the US electoral process as an example of post-truth politics facilitated by social networks. But it is also a symptom that the Internet is sick.

In its Internet Health Report, Mozilla points to the centralisation of the Internet in the hands of a few big companies as one of the factors that foster a lack of neutrality and diversity, as well as a lack of literacy in this medium. Facebook is not just one of the most used social networks, with 1.7 billion users; it is also the main point of entry to the Internet for many people, while Google monopolises searches. These services have grown out of the first service providers and the advent of Web 2.0, creating a structure of services based on the metric of attractiveness. “Giving people what they want” has justified the monitoring of users and the algorithmic control of the resulting data. It has also created a relationship in which users depend on the ready-to-use tools these big providers offer, without realising the cost of easy access in terms of centralisation, invasive surveillance and the influence these companies have over the control of information flows.

The phenomenon of misinformation on the Internet stems from the fact that the medium gives fake or low-quality information the same capacity to go viral as a true piece of news. This is inherent in the structure of the medium, and it is reinforced by the pay-per-click economic model, exemplified by Google’s advertising service, and by the filter bubbles created by the algorithmic curation of social networks like Facebook. As a result, fake news on the Internet is profitable and tends to reaffirm our position within a community.

Services like Google’s AdSense encourage website developers to generate attractive, indexable content to increase visibility and raise the cost per click. Unfortunately, sensationalist falsehoods can be extremely attractive. Seeing this confirmed in Google Analytics is what led a group of Macedonian teenagers to become promoters of Trump’s campaign. In the small town of Veles, over 100 websites sprang up with misleading names such as DonaldTrumpNews.co or USConservativeToday.com, devoted to spreading fake news about the campaign in order to drive traffic to pages of adverts for economic gain. The biggest source of traffic to these websites turned out to be Facebook, where, according to a study by researchers at New York University and Stanford University, fake news was shared up to 30 million times.

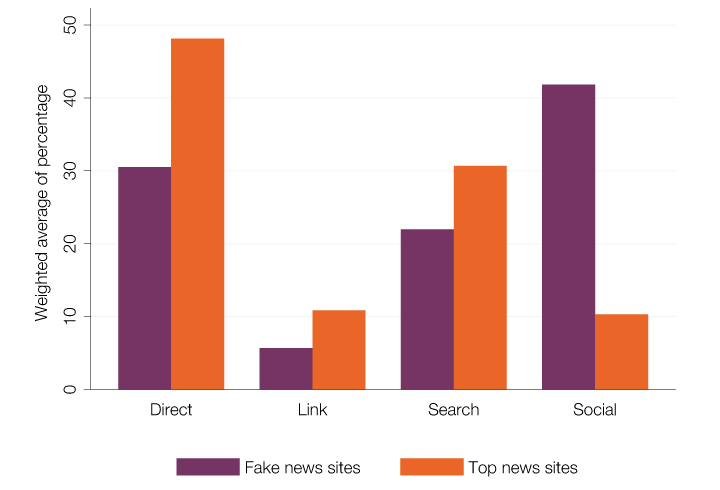

This figure presents the share of traffic from different sources for the top 690 U.S. news websites and for 65 fake news websites. Sites are weighted by number of monthly visits. Data are from Alexa. | Social Media and Fake News in the 2016 Election, Hunt Allcott and Matthew Gentzkow

The traffic of falsehoods on social networks is encouraged by social and psychological factors, such as the decline in attention that occurs in information-dense environments and our tendency to share content from our friends uncritically, but it is largely due to the algorithmic filtering carried out by these platforms. Facebook spares us excess information and redundancy by filtering the content that appears on our walls according to our preferences and our closeness to our contacts. In doing so, it encloses us in bubbles that keep us away from diverse viewpoints and from the controversies that give facts their meaning. This filtering produces homophilous sorting: like-minded users form clusters that are reinforced as they share information that is unlikely to leap from one cluster to another, exposing users to a low level of entropy, that is, to little information that brings new and different viewpoints. These bubbles act as echo chambers, generating narratives that can reach beyond the Internet and affect our culture and society. The Wall Street Journal has published an app, based on research carried out with Facebook, that lets you follow side by side the narratives generated by the red (conservative) and blue (liberal) feeds. This polarisation, while limiting our perception, makes us identifiable targets, susceptible to manipulation by manufactured news.
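The “low level of entropy” of a filtered feed can be made concrete with Shannon entropy computed over the viewpoints it contains. The sketch below is a toy model, not Facebook’s ranking algorithm: it assumes each item carries a single hypothetical `leaning` label and that the filter simply ranks items matching the user’s leaning first and keeps the top `k`.

```python
import math
from collections import Counter


def entropy(labels):
    """Shannon entropy (in bits) of the viewpoint labels present in a feed."""
    counts = Counter(labels)
    n = len(labels)
    return sum((c / n) * math.log2(n / c) for c in counts.values())


def filtered_feed(items, user_leaning, k):
    """Toy filter (hypothetical rule): items sharing the user's leaning rank first."""
    # False sorts before True, so matching items come first; sorted() is stable.
    ranked = sorted(items, key=lambda item: item["leaning"] != user_leaning)
    return ranked[:k]


# Eight hypothetical items, half "red" and half "blue".
items = [{"id": i, "leaning": "red" if i % 2 else "blue"} for i in range(8)]
feed = filtered_feed(items, user_leaning="blue", k=4)

print(entropy([it["leaning"] for it in items]))  # 1.0 bit: both viewpoints equally present
print(entropy([it["leaning"] for it in feed]))   # 0.0 bits: only one viewpoint left
```

Even this crude rule shows the mechanism: the unfiltered pool carries one full bit of viewpoint diversity, while the personalised top four carries none.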

Technology is part of the problem; it remains to be seen whether it can also be part of the solution. Artificial intelligence can’t decide whether an item of news is true or false—a complex, arduous task even for an expert human being. But tools based on machine-learning and textual analysis can help to analyse the context and more quickly identify information that needs checking.

The Fake News Challenge is an initiative in which teams compete to create tools that help human fact-checkers. The first phase of the competition focuses on stance detection: analysing the language of a news item can help classify it according to whether it agrees with, disagrees with, discusses or is unrelated to the claim made in the headline. This automatic classification allows a human checker to quickly access a list of articles related to an event and examine the arguments for and against.
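The Fake News Challenge dataset labels each headline/body pair as agree, disagree, discuss or unrelated. To illustrate what those labels mean, here is a deliberately crude rule-based sketch: it first checks word overlap to decide whether the body is related at all, then looks for refuting or hedging cue words. The word lists, the 0.2 overlap threshold and the `stance` function are hypothetical; real systems typically learn these decisions from labelled data rather than hand-written rules.

```python
import re

# Hypothetical cue-word lists; a real system would learn such signals from data.
REFUTING = {"fake", "hoax", "false", "deny", "denies", "denied", "debunked"}
HEDGING = {"reportedly", "allegedly", "claims", "claimed", "rumour", "unconfirmed"}


def tokens(text):
    """Lower-cased set of words in a text."""
    return set(re.findall(r"[a-z']+", text.lower()))


def stance(headline, body, related_threshold=0.2):
    """Toy stance label for a (headline, body) pair using the four FNC-1 classes."""
    head, words = tokens(headline), tokens(body)
    # Share of headline words that also appear in the body.
    overlap = len(head & words) / max(len(head), 1)
    if overlap < related_threshold:
        return "unrelated"
    if words & REFUTING:
        return "disagree"
    if words & HEDGING:
        return "discuss"
    return "agree"


headline = "Pope Francis endorses Donald Trump for president"
bodies = [
    "Pope Francis endorses Donald Trump for president in a statement today.",
    "Pope Francis reportedly endorses Donald Trump, according to unnamed sources.",
    "A site claimed Pope Francis endorses Donald Trump, but the story is a hoax.",
    "Local bakery wins award for best croissant.",
]
for body in bodies:
    print(stance(headline, body))  # agree, discuss, disagree, unrelated
```

Note that the refuting check runs before the hedging check, so the third body, which contains both “claimed” and “hoax”, is labelled disagree.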

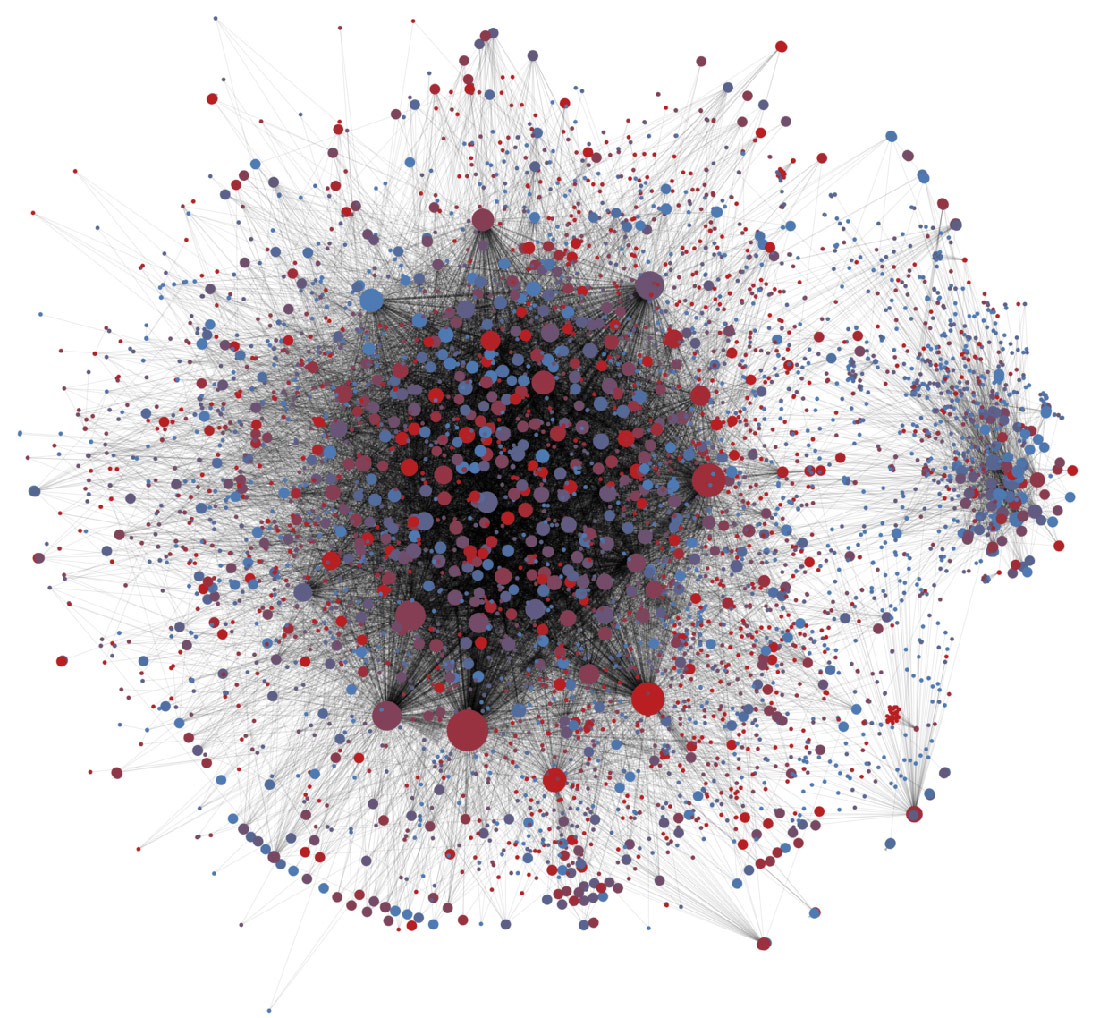

Apart from language analysis, another computational approach that helps to analyse the context of a news item is network analytics. OSoMe, the Observatory on Social Media developed by the Center for Complex Networks and Systems Research at Indiana University and directed by Fil Menczer, offers a series of tools that analyse how information moves through social networks, looking for patterns that reveal how political polarisation occurs and how fake news is transmitted, and that help to identify fake news automatically.

In this visualization of the spread of the #SB277 hashtag about a California vaccination law, dots are Twitter accounts posting using that hashtag, and lines between them show retweeting of hashtagged posts. Larger dots are accounts that are retweeted more. Red dots are likely bots; blue ones are likely humans. | Onur Varol | CC BY-ND
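The two quantities such a retweet-network visualisation encodes, how often an account is retweeted (dot size) and whether retweets stay inside one community, can be computed directly from a list of retweet edges. The sketch below uses hypothetical account names and community labels; it does not reproduce OSoMe’s tools or its bot classifier.

```python
from collections import Counter

# Hypothetical retweet edges: (retweeter, original_author).
edges = [
    ("ann", "dan"), ("bob", "dan"), ("cat", "dan"), ("bob", "ann"),
    ("eve", "fay"), ("gus", "fay"), ("fay", "eve"), ("cat", "eve"),
]
# Hypothetical community label for each account.
group = {"ann": "A", "bob": "A", "cat": "A", "dan": "A",
         "eve": "B", "fay": "B", "gus": "B"}


def retweet_counts(edges):
    """Number of times each author was retweeted (the 'dot size')."""
    return Counter(author for _, author in edges)


def within_group_fraction(edges, group):
    """Share of retweets that stay inside one community; close to 1.0 means polarised."""
    same = sum(1 for src, dst in edges if group[src] == group[dst])
    return same / len(edges)


print(retweet_counts(edges).most_common(1))  # [('dan', 3)]
print(within_group_fraction(edges, group))   # 0.875
```

Here only one of the eight retweets (“cat” retweeting “eve”) crosses between communities, the kind of pattern that signals a polarised conversation.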

One of these tools is Hoaxy, a platform created to monitor the spread of fake news and its debunking on Twitter. The platform tracks tweets and retweets of URLs pointing to false claims reported by fact-checkers in order to see how they are distributed online. Preliminary analysis shows that fake news is more abundant than its debunking, that it precedes fact-checking by 10 to 20 hours, and that it is propagated by a small number of very active users, whereas debunking is distributed more uniformly.
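The two Hoaxy-style findings, the delay between a claim and its debunking and the concentration of sharing among a few accounts, correspond to simple measurements over timestamped shares. The records below are invented for illustration, and the field names (`time`, `user`, `kind`) are assumptions, not Hoaxy’s data format.

```python
from collections import Counter
from datetime import datetime

# Invented share records: "claim" = link to a false story, "debunk" = link to a fact-check.
shares = [
    {"time": datetime(2016, 11, 1, 8), "user": "a", "kind": "claim"},
    {"time": datetime(2016, 11, 1, 9), "user": "a", "kind": "claim"},
    {"time": datetime(2016, 11, 1, 10), "user": "a", "kind": "claim"},
    {"time": datetime(2016, 11, 1, 12), "user": "b", "kind": "claim"},
    {"time": datetime(2016, 11, 1, 22), "user": "c", "kind": "debunk"},
    {"time": datetime(2016, 11, 2, 1), "user": "d", "kind": "debunk"},
    {"time": datetime(2016, 11, 2, 3), "user": "e", "kind": "debunk"},
]


def first_share(shares, kind):
    """Timestamp of the earliest share of the given kind."""
    return min(s["time"] for s in shares if s["kind"] == kind)


def lag_hours(shares):
    """Hours between the first claim and the first debunking."""
    delta = first_share(shares, "debunk") - first_share(shares, "claim")
    return delta.total_seconds() / 3600


def top_user_fraction(shares, kind, k=1):
    """Fraction of shares of one kind produced by the k most active users."""
    counts = Counter(s["user"] for s in shares if s["kind"] == kind)
    top = sum(n for _, n in counts.most_common(k))
    return top / sum(counts.values())


print(lag_hours(shares))                    # 14.0
print(top_user_fraction(shares, "claim"))   # 0.75
print(top_user_fraction(shares, "debunk"))  # about 0.33
```

In this toy data a single account produces three quarters of the claim shares, while the debunking is spread evenly across three accounts.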

For the automated detection of fake news, network analytics can draw on knowledge graphs. This technique makes it possible to use knowledge that has been generated and verified collectively, as in the case of Wikipedia, to check new facts. A knowledge graph built from this collaborative encyclopaedia contains the relations between the entities it mentions: each sentence is represented so that its subject and object are nodes linked by its predicate, forming a network. The accuracy of a new statement can then be estimated from the graph: it is higher when the path linking subject and object is sufficiently short and does not pass through excessively general nodes.
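One way to turn “short path, no excessively general nodes” into a number is to charge each intermediate node on a path the logarithm of its degree (so highly connected hubs are expensive), find the cheapest path with Dijkstra’s algorithm, and map its cost c to a score 1/(1 + c), where 1 means subject and object are directly linked. This scoring rule is an assumption for the sketch, the graph ignores predicate labels, and the handful of facts below are illustrative rather than extracted from Wikipedia.

```python
import heapq
import math


def add_fact(graph, subject, predicate, obj):
    """Store a (subject, predicate, object) triple as an undirected edge.

    The predicate is ignored in this simplified sketch."""
    graph.setdefault(subject, set()).add(obj)
    graph.setdefault(obj, set()).add(subject)


def truth_score(graph, subject, obj):
    """Score in (0, 1]: 1 for a direct link, lower for long or hub-heavy paths, 0 if none."""
    if subject not in graph or obj not in graph:
        return 0.0
    best = {subject: 0.0}
    heap = [(0.0, subject)]
    while heap:
        cost, node = heapq.heappop(heap)
        if node == obj:
            return 1.0 / (1.0 + cost)
        if cost > best[node]:
            continue  # stale heap entry
        for nxt in graph[node]:
            # Intermediate nodes cost log(degree); reaching the object itself is free.
            step = 0.0 if nxt == obj else math.log(len(graph[nxt]))
            if cost + step < best.get(nxt, math.inf):
                best[nxt] = cost + step
                heapq.heappush(heap, (cost + step, nxt))
    return 0.0


kg = {}
for fact in [
    ("Barack Obama", "born in", "Honolulu"),
    ("Honolulu", "located in", "Hawaii"),
    ("Hawaii", "state of", "United States"),
    ("Barack Obama", "president of", "United States"),
    ("Donald Trump", "president of", "United States"),
    ("Donald Trump", "born in", "New York City"),
    ("New York City", "located in", "United States"),
]:
    add_fact(kg, *fact)

print(truth_score(kg, "Barack Obama", "Honolulu"))       # 1.0 (direct link)
print(truth_score(kg, "Barack Obama", "Hawaii"))         # ~0.59, via Honolulu (degree 2)
print(truth_score(kg, "Barack Obama", "New York City"))  # ~0.42, via the hub "United States"
```

The statement “Obama is linked to Hawaii” scores higher than “Obama is linked to New York City” because the only short route to New York passes through the general “United States” node, which the degree penalty discounts.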

Other tools that use computational means to track the spread of information and support checking based on textual content, the reputation of sources, trajectory and so on include RumorLens, FactWatcher, Twitter Trails and Emergent.info, implemented as applications or bots. Particular mention should be made of Ushahidi’s collaborative tool SwiftRiver, which uses the metaphors of a river (information flow), channels (sources), droplets (facts) and a bucket (data filtered or added by the user) in an application designed to track and filter facts in real time and collectively create meaning. Here, users can select a series of channels, such as Twitter or RSS, to establish a flow of information that can be shared and filtered by keywords, dates or location, with the option of commenting and adding data.
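The river, channel, droplet and bucket pipeline amounts to filtering a stream of items by keyword, date range and location. The sketch below is a hypothetical model of that idea; the `Droplet` fields and the `fill_bucket` function are invented for illustration and are not SwiftRiver’s code or API.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Droplet:
    """One item flowing in the river (hypothetical fields)."""
    channel: str  # source, e.g. "twitter" or "rss"
    text: str
    day: date
    location: str


def fill_bucket(river, keywords=(), start=None, end=None, location=None):
    """Keep droplets that match any keyword, fall in the date range and match the location."""
    bucket = []
    for d in river:
        text = d.text.lower()
        if keywords and not any(k.lower() in text for k in keywords):
            continue
        if start is not None and d.day < start:
            continue
        if end is not None and d.day > end:
            continue
        if location is not None and d.location != location:
            continue
        bucket.append(d)
    return bucket


river = [
    Droplet("twitter", "Flooding reported on Main Street", date(2017, 3, 1), "Nairobi"),
    Droplet("rss", "Council approves new budget", date(2017, 3, 1), "Nairobi"),
    Droplet("twitter", "Flooding closes schools", date(2017, 3, 5), "Mombasa"),
]
bucket = fill_bucket(river, keywords=["flooding"], location="Nairobi")
print([d.text for d in bucket])  # ['Flooding reported on Main Street']
```

Comments and user-added data would be extra fields on each droplet; they are left out to keep the filtering logic visible.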

The proliferation of Internet use has led to a post-digital society in which connectedness is a constituent part of our identities and surroundings, and where everything that happens online has consequences in real cultural and social contexts. This proliferation has occurred alongside what the Mozilla Foundation calls the veiled crisis of the digital age. The simplification of tools and software and their centralisation in the hands of technology giants foster ignorance about the mechanisms governing the medium, producing passive users who are unaware of their participation in this ecology. The Internet has changed how information is produced: it no longer comes from the authority of a few institutions but is created in a collective process. The informed adoption of these and other tools could help to reveal the mechanisms that produce, distribute and evaluate information, and contribute to digital and information literacy: the development of critical thinking that makes us active participants, responsible for the creation of knowledge in an ecology enriched by the participation of new voices, and where sharing is caring.

Alfred | 24 August 2017

There aren’t “bilions” (in Catalan, a million million) of human beings, so there can’t be that many users.
