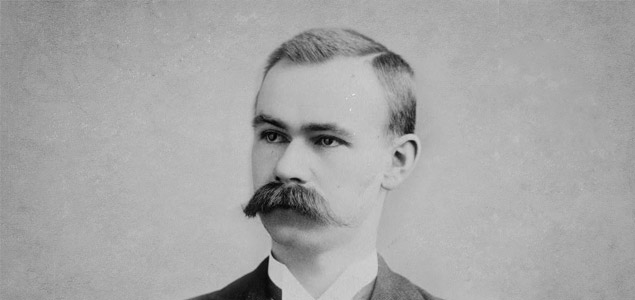

Herman Hollerith (1860-1929), the inventor of a machine that used punched cards to rapidly process millions of pieces of data and the founder of the Tabulating Machine Co., which later merged to become IBM. Source: Library of Congress.

The last decade has seen some fundamental truths take root, or at least become widespread in the community, regarding the status of information as a raw material and its role in the world. Namely:

- The amount of data that we are able to produce, transmit, and store is growing at an unprecedented rate.

- Given that it is increasingly cheap and easy to store data, it is worth storing it by default.

- This bulk information contains large pockets of valuable knowledge that can be extracted. But only if we can ‘read’ it of course, and this gets more difficult as volumes get larger. The more data we have, the more we are forced to develop new ways of interpreting it.

- The extreme ease with which organisations can now generate data is offset by the anxiety that comes from the sense that they are letting the value buried within it slip away. That they are unable to separate the wheat from the chaff and extract every last grain.

Science, business and government organisations have developed a substantial technological infrastructure to capture and store as much data as possible at each stage of their operations. They invest in new fields of knowledge – the new Data Science – and emergent professions ranging from ‘data scientists’ to data analysts and information visualisation experts. But the fact remains that most large organisations are constantly overwhelmed in their attempts to control the information they produce. As a US military official put it, “we are swimming in sensors and drowning in data.”

This situation is typical of the age of the Data Deluge.

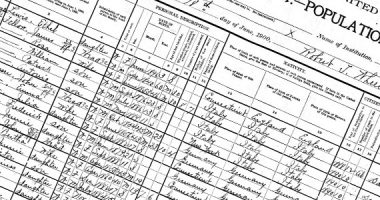

The idea that we are living in the aftershock of an enormous information explosion is hardly new. The sense that the data we are able to produce keeps increasing until it reaches unmanageable levels appeared just as IT became a real industry, implemented in an ever increasing number of administrative processes. The first recorded use of the term ‘information explosion’ was in an academic journal in 1961, followed almost immediately by an IBM ad in the New York Times. Today’s slightly less dramatic but equally sensationalist version is the data ‘deluge’ or ‘tsunami’, terms that became common usage among the academic community and the financial press midway through last decade.

Probably the most technical of the many ways of expressing the vertigo produced by the data explosion is Kryder’s Law. While Moore’s Law has been able to more or less correctly predict the rate of increase of the processing power of computers – doubling every 18 months –, Kryder’s Law tries to accurately predict our even faster-growing capacity to store ever-larger amounts of digital data in a limited space.

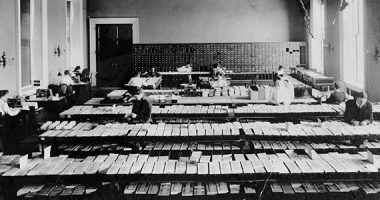

From our vantage point in 2014, this photograph probably more effectively illustrates the size and nature of the deluge. Fifty years ago, a hard drive was a huge piece of equipment the size of a small vehicle, with a memory that was able to store the equivalent of an MP3 song; a preposterously small part of the storage capacity of the mobile phone in your pocket or handbag.

The journey from paper tape and perforated cards – the first computer storage systems in the 1940s – to today’s USB flash drives and mini SD cards is another very eloquent expression of Kryder’s Law. This vertigo is best understood as the tension between a shrinking physical medium and a storage capacity that expands endlessly.

Now that our personal experience and life stories are being encoded in these magnetic and optical storage devices, their long-term preservation is an increasingly urgent matter. If our collective history is stored in the thousands of Data Centres scattered throughout the globe, these may come to play an archival rather than simply operational role. Even so, we have not come up with a strategy to ensure that this information remains accessible and useable in the future. Deals such as one that allows the US Library of Congress to archive a copy of all the messages sent on Twitter seem to guarantee that some important data will be saved. But every time a startup dies, or a small service is taken over by an internet giant and allowed to wither away, the data that it generated and preserves can disappear for ever, instantly, without trace.

The most important of the new archival institutions that have stepped in to fill this void is probably Brewster Kahle’s Internet Archive. Since 1996, the Internet Archive systematically crawls and ‘photographs’ the Web to store snapshots of what the Internet was like on a particular day in a particular year, defying its essentially unstable and changeable nature. This beautiful film by Jonathan Minard shows the physical infrastructure – housed in a former church – that makes the Internet Archive possible. A fire recently threatened this valuable heritage, although fortunately it only affected the area in which print books are scanned.

The Data Deluge does not show any signs of easing off, and Kryder’s Law is likely to continue to prevail. Perhaps the next step, the leap that will take us to another level, will be found in the medium that nature uses to store its data.

The European Bioinformatics Institute in Cambridge – Europe’s largest database of genome sequences – stores highly sensitive digital information that must be conserved and remain accessible for many decades. Hard drives, which require cooling and have to be replaced on a regular basis, are far from ideal for the task of storing the code of life. Hard drive technology is not nearly as sophisticated as the DNA itself.

This paradox did not go unnoticed by the zoologist and mathematician Nick Goldman, one of the ‘librarians of life’ who looks after database maintenance at the EBI: our storage media are fragile, take up space, and require maintenance, while DNA can store enormous amounts of data in a tiny space, for millions of years. In 2013, Goldman and his team announced that they had managed to transfer a modest 739 kilobytes of data to a DNA chain. Later, a computer managed to decode it and read its contents: Shakespeare’s 154 sonnets, an academic article, a photo of the researchers’ laboratory, 26 seconds of Martin Luther King’s most famous speech, and a software algorithm. It is still early days, but the EBI team has high hopes for the technique that they have developed: their long-term goal is to store the equivalent of a million CDs on a gram of DNA, for at least 10,000 years.

Leave a comment